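Elasticsearch Series: Getting Started with Aggregation Analysis

What is aggregation analysis?

- A search engine answers questions such as:

  - Which orders have a Shanghai address?

  - Which orders were created in the last day but have not been paid?

- Aggregation analysis answers questions such as:

  - How many orders were completed on each day of the last week?

  - What was the average order amount on each day of the last month?

  - Which five products sold best over the last six months?

Aggregation analysis

Aggregation analysis (Aggregation) is the feature that es provides, in addition to search, for statistical analysis of the data stored in es.

- Feature-rich: it offers several kinds of analysis, including Bucket, Metric, and Pipeline, which cover most analysis needs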

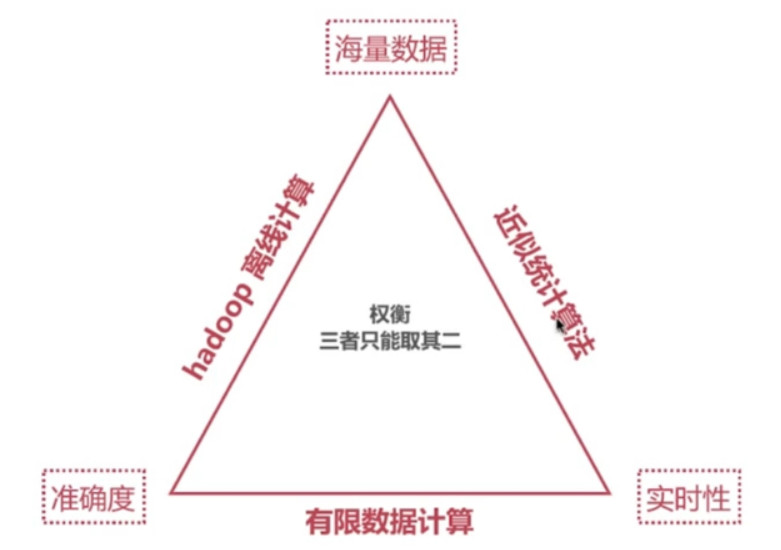

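- Highly practical: all results are computed and returned immediately, whereas big-data systems such as Hadoop usually work at T+1 (next-day) latency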

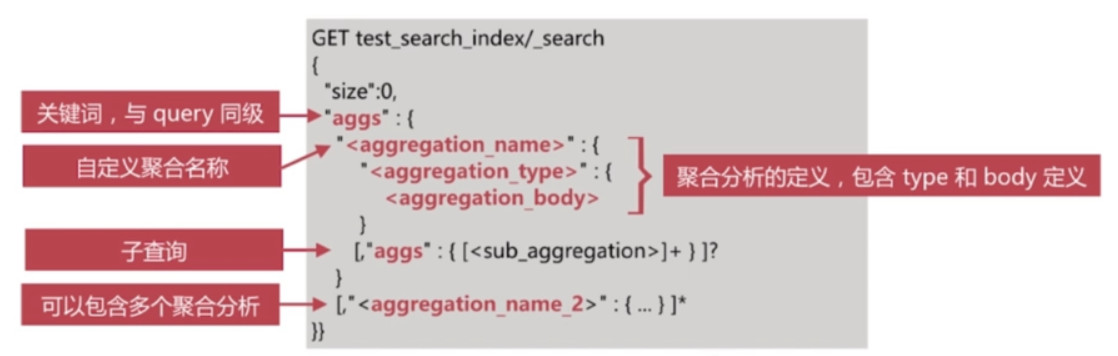

Aggregation analysis is part of search, and the api looks like this:

The index and data used by the aggregation examples:

# aggregation
POST test_search_index/doc/_bulk
{"index":{"_id":"1"}}
{"username":"alfred way","job":"java engineer","age":18,"birth":"1990-01-02","isMarried":false,"salary":10000}
{"index":{"_id":"2"}}
{"username":"tom","job":"java senior engineer","age":28,"birth":"1980-05-07","isMarried":true,"salary":30000}
{"index":{"_id":"3"}}
{"username":"lee","job":"ruby engineer","age":22,"birth":"1985-08-07","isMarried":false,"salary":15000}
{"index":{"_id":"4"}}
{"username":"Nick","job":"web engineer","age":23,"birth":"1989-08-07","isMarried":false,"salary":8000}
{"index":{"_id":"5"}}
{"username":"Niko","job":"web engineer","age":18,"birth":"1994-08-07","isMarried":false,"salary":5000}
{"index":{"_id":"6"}}
{"username":"Michell","job":"ruby engineer","age":26,"birth":"1987-08-07","isMarried":false,"salary":12000}

Example:

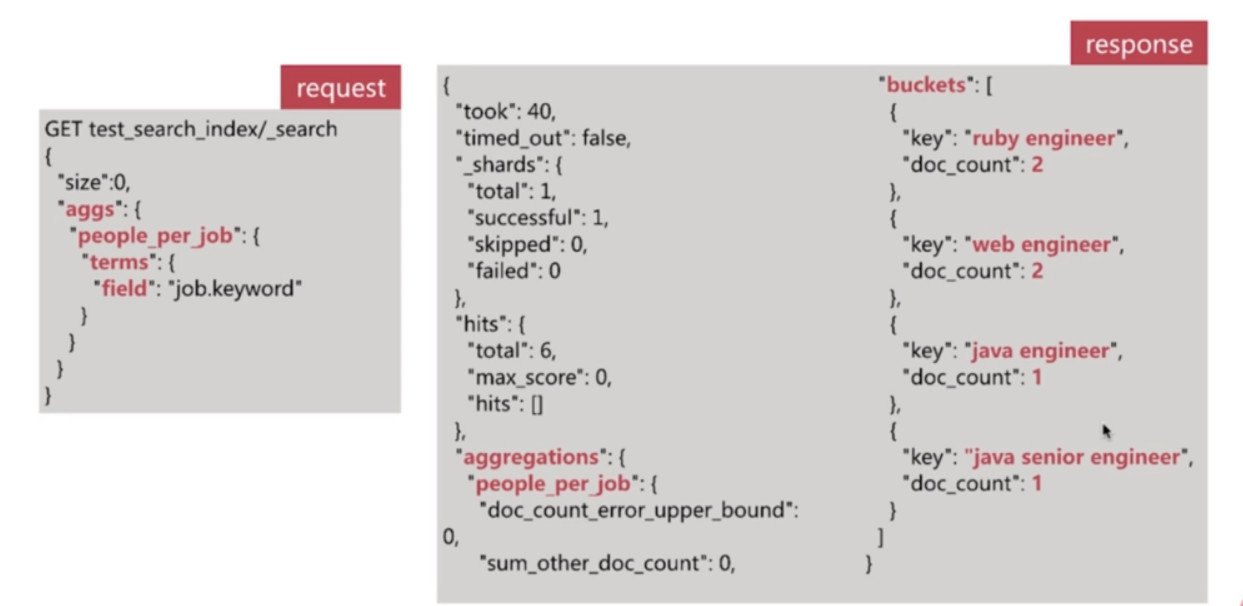

How are the company's current employees distributed across job positions?

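Types of aggregation analysis

- To make them easier to understand, es groups aggregations into four main types:

  - Bucket: bucketing aggregations, similar to GROUP BY in SQL

  - Metric: metric aggregations, such as maximum, minimum, and average

  - Pipeline: pipeline aggregations, which analyze the results of an earlier aggregation

  - Matrix: matrix aggregations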

Metric聚合分析

- 主要分如下两类:

- 单值分析, 只输出一个分析结果

- min, max, avg, sum

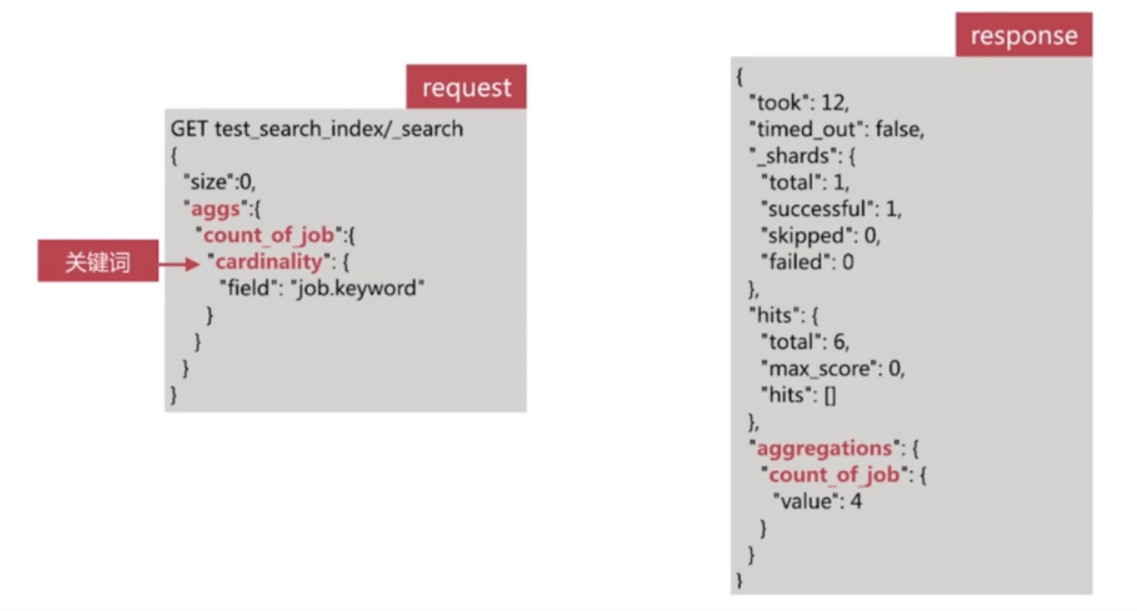

- cardinality

- 多值分析, 输出多个分析结果

- stats, extended stats

- percentile, percentile rank

- top hits

- 单值分析, 只输出一个分析结果

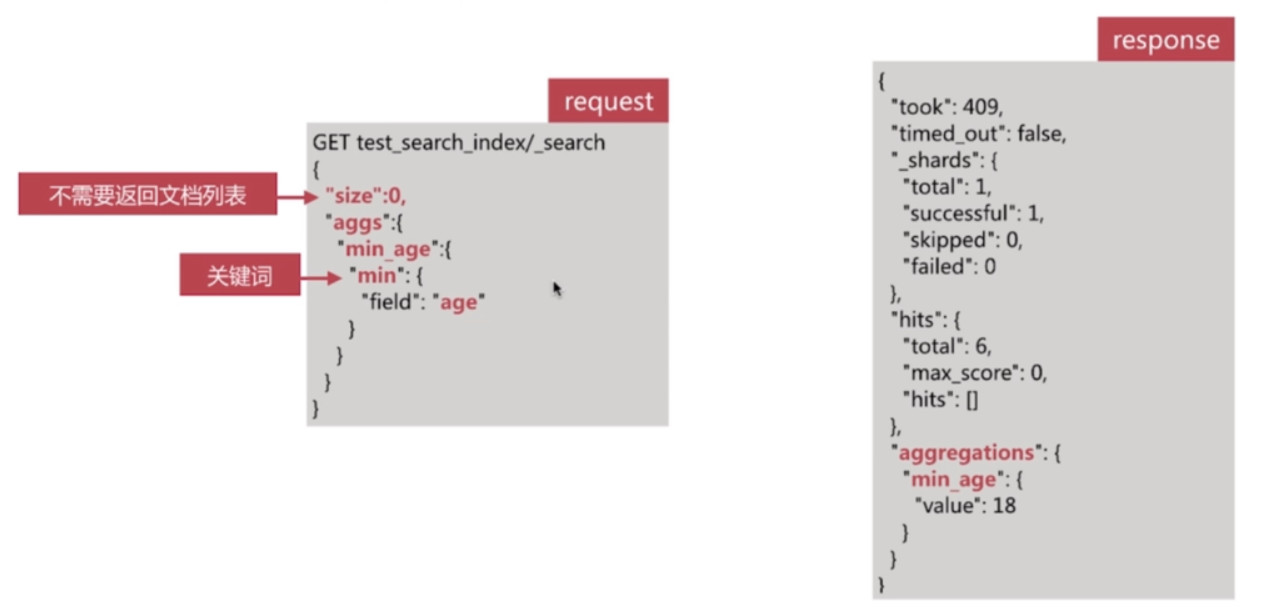

Min

Returns the minimum value of a numeric field

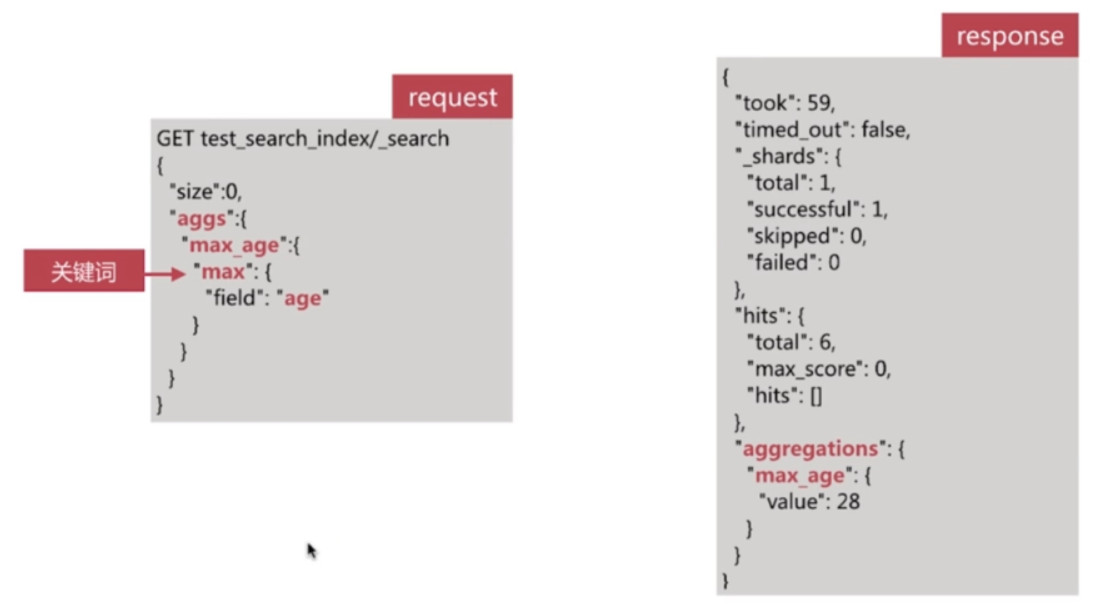

Max

- Returns the maximum value of a numeric field

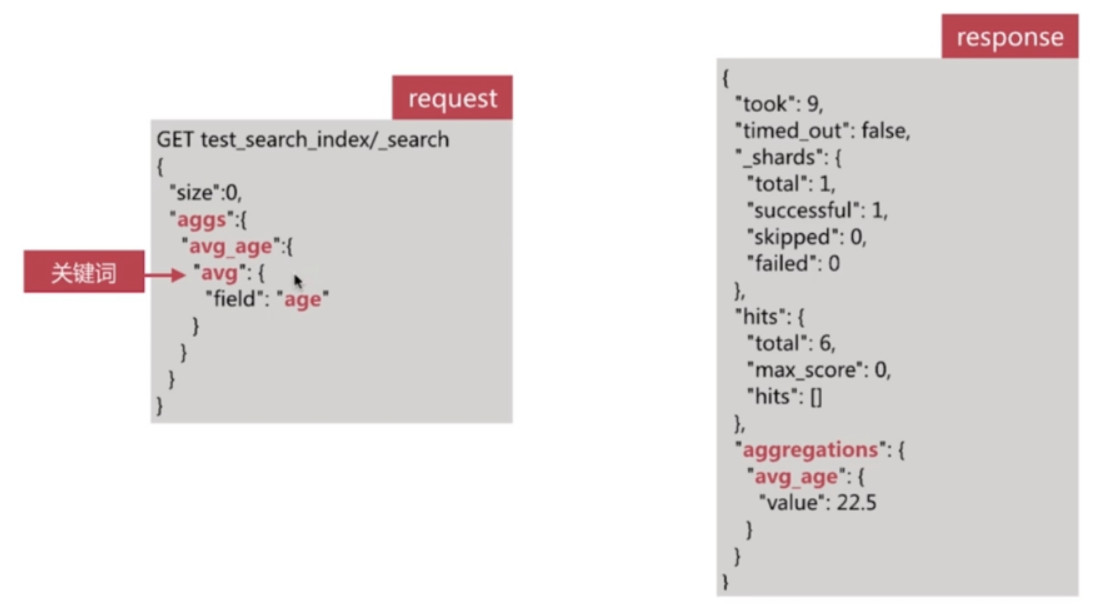

Avg

Returns the average value of a numeric field

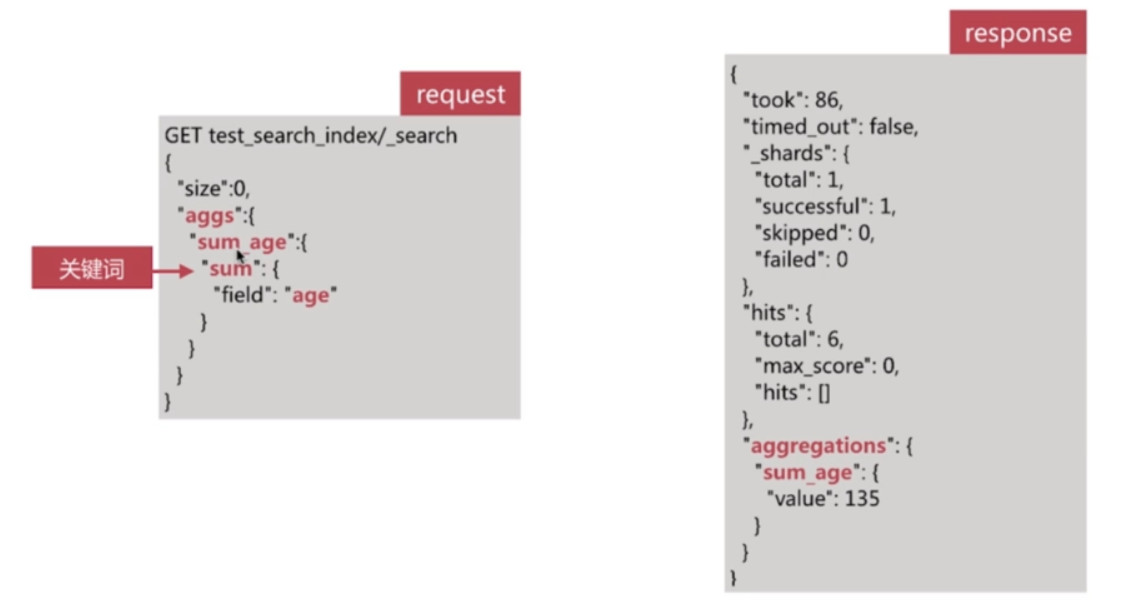

Sum

- Returns the sum of a numeric field

Returning several aggregation results in one request

Example:

# request
GET /test_search_index/_search
{
  "size": 0,
  "aggs": {
    "max_age": { "max": { "field": "age" } },
    "min_age": { "min": { "field": "age" } },
    "avg_age": { "avg": { "field": "age" } },
    "sum_age": { "sum": { "field": "age" } }
  }
}

# response
{
  "took": 71,
  "timed_out": false,
  "_shards": { "total": 5, "successful": 5, "skipped": 0, "failed": 0 },
  "hits": { "total": 6, "max_score": 0, "hits": [] },
  "aggregations": {
    "max_age": { "value": 28 },
    "avg_age": { "value": 22.5 },
    "min_age": { "value": 18 },
    "sum_age": { "value": 135 }
  }
}

Cardinality

Cardinality is the size of a set, that is, the number of distinct values; it is similar to distinct count in SQL
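To make the idea concrete, here is a minimal plain-Python sketch of what a distinct count over the sample data looks like. It assumes the count is taken over whole `job` values (as the `job.keyword` sub-field would store them) and computes an exact count, whereas es returns an approximation. The helper name `cardinality` is only illustrative.

```python
# Job values of the six sample documents in test_search_index.
jobs = [
    "java engineer",
    "java senior engineer",
    "ruby engineer",
    "web engineer",
    "web engineer",
    "ruby engineer",
]


def cardinality(values):
    """Exact number of distinct values (es approximates this number)."""
    return len(set(values))


distinct_jobs = cardinality(jobs)
print(distinct_jobs)
```

On an analyzed text field the count would be taken over individual tokens instead, so the number could differ.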

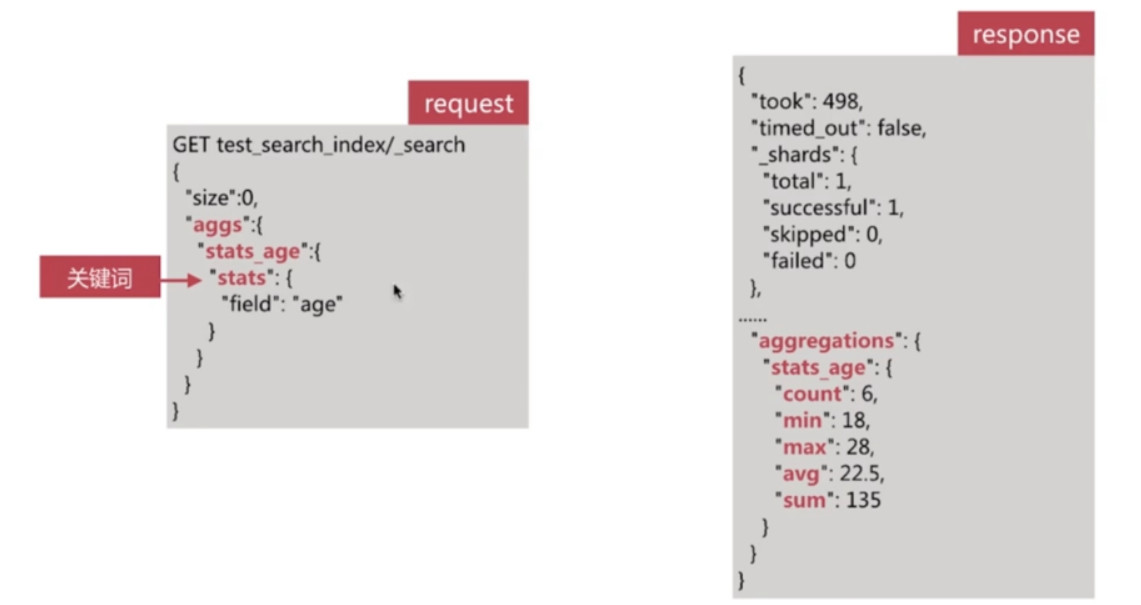

Stats

Returns a set of statistics for a numeric field: min, max, avg, sum, and count

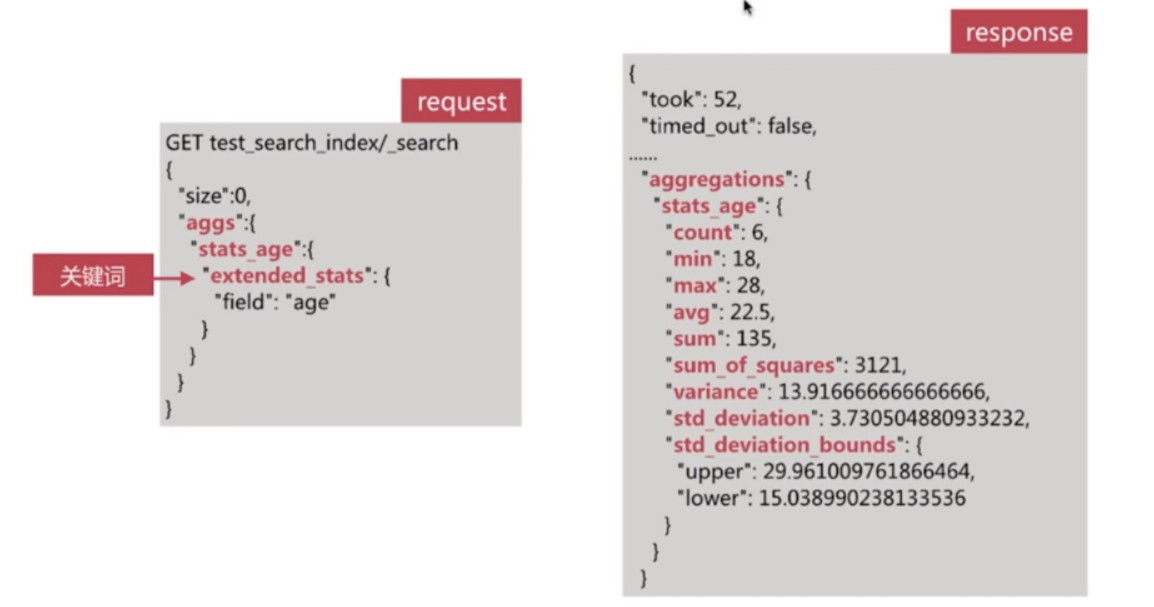

Extended Stats

An extension of stats that includes more statistics, such as variance and standard deviation
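The following plain-Python sketch shows which numbers stats and extended stats summarize, using the `age` values of the sample documents. It is an illustration rather than es code: the function names are made up here, and the variance is assumed to be the population variance (dividing by the count).

```python
import math

# Ages of the six sample documents.
ages = [18, 28, 22, 23, 18, 26]


def stats(values):
    """The five figures reported by a stats-style summary."""
    total = sum(values)
    return {
        "count": len(values),
        "min": min(values),
        "max": max(values),
        "avg": total / len(values),
        "sum": total,
    }


def extended_stats(values):
    """stats plus sum of squares, variance and standard deviation."""
    result = stats(values)
    mean = result["avg"]
    # Assumption: population variance (divide by count, not count - 1).
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    result["sum_of_squares"] = sum(v * v for v in values)
    result["variance"] = variance
    result["std_deviation"] = math.sqrt(variance)
    return result


age_stats = stats(ages)
age_extended = extended_stats(ages)
print(age_stats)
print(age_extended)
```

The min, max, avg, and sum values agree with the single-value example earlier in this section (18, 28, 22.5, 135).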

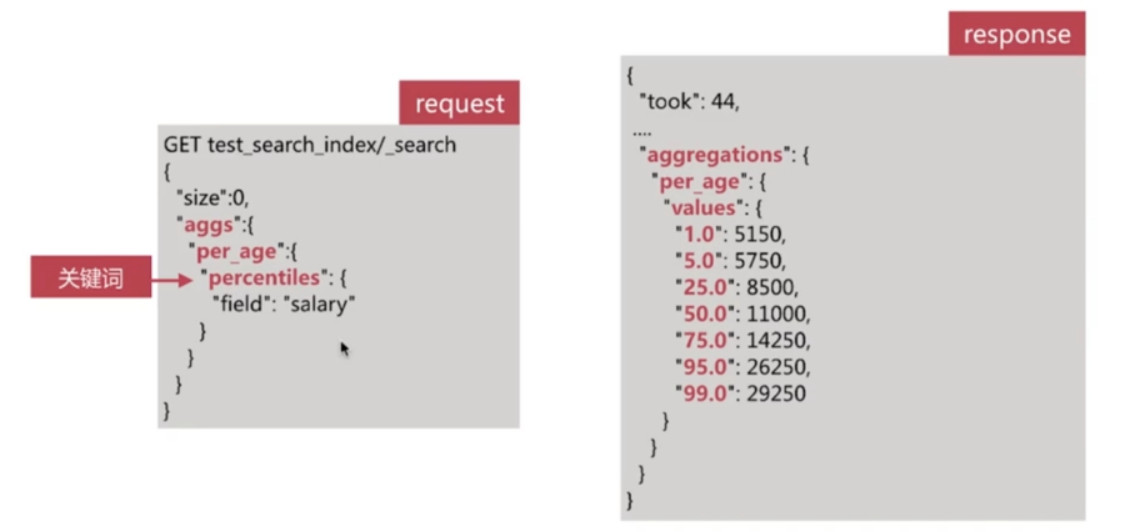

Percentile

Percentile statistics

Example:

# request
GET /test_search_index/_search
{
  "size": 0,
  "aggs": {
    "pertile_age": {
      "percentiles": {
        "field": "age",
        "percents": [1, 5, 25, 50, 75, 95, 99]
      }
    }
  }
}

# response
{
  "took": 359,
  "timed_out": false,
  "_shards": { "total": 5, "successful": 5, "skipped": 0, "failed": 0 },
  "hits": { "total": 6, "max_score": 0, "hits": [] },
  "aggregations": {
    "pertile_age": {
      "values": {
        "1.0": 17.999999999999996,
        "5.0": 18,
        "25.0": 19,
        "50.0": 22.5,
        "75.0": 25.25,
        "95.0": 27.5,
        "99.0": 27.9
      }
    }
  }
}

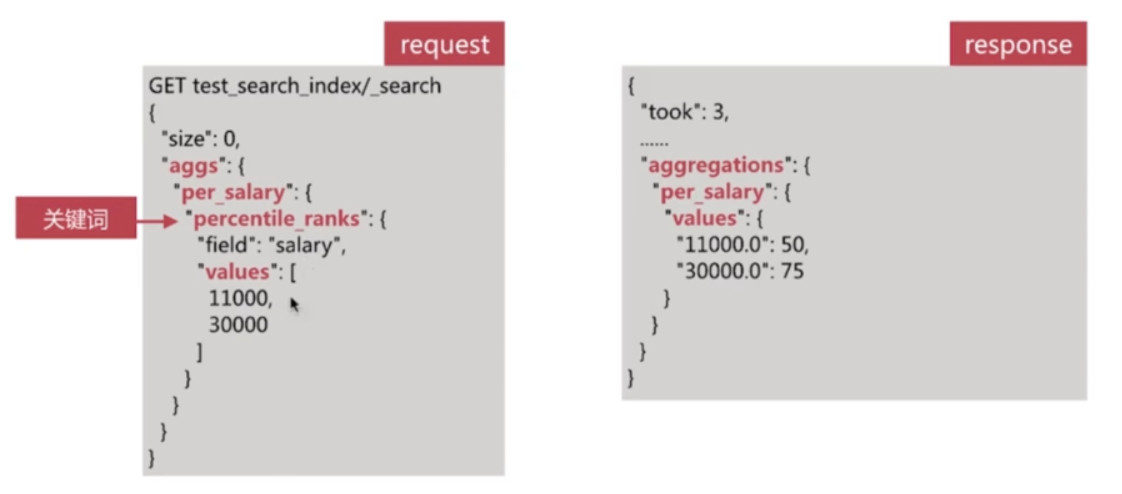

Percentile Rank

Percentile rank statistics: for a given value, the percentage of values that fall at or below it

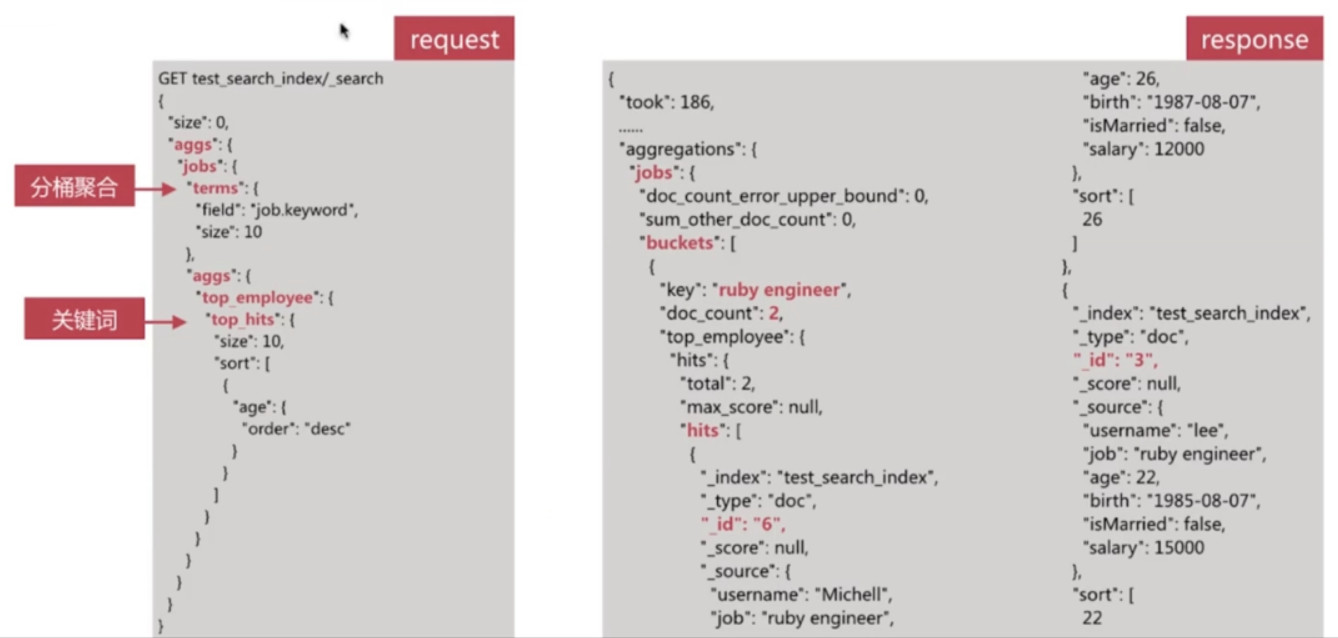

Top Hits

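Typically used after bucketing to fetch the best-matching top documents in each bucket, that is, the detail records

Bucket aggregation analysis

A Bucket aggregation assigns documents to different buckets according to a rule, and the resulting grouping is what serves the analysis

Common Bucket aggregations, by bucketing strategy: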

- Terms

- Range

- Date Range

- Histogram

- Date Histogram

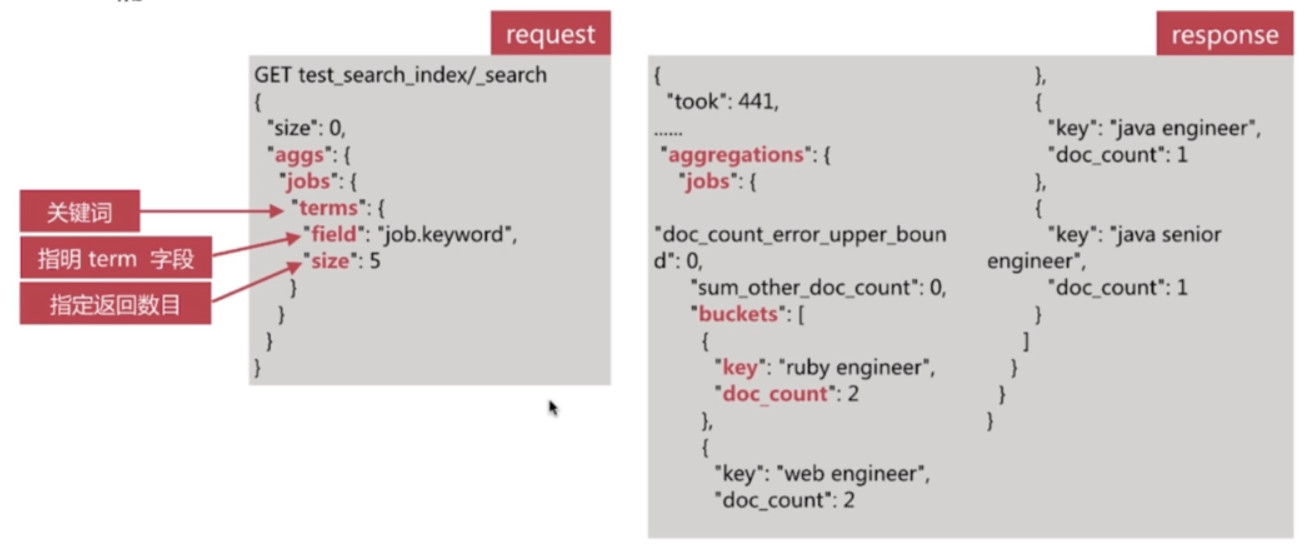

Terms

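This is the simplest bucketing strategy: documents are bucketed directly by term. For a text field, the buckets are built from the analyzed tokens (note that fielddata must be enabled)

Example: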

# request

# Demonstrates terms bucketing with fielddata enabled and with it disabled

GET /test_search_index

# response

# As shown below, the job field of the test_search_index index has "fielddata": true

# The username field has "fielddata": false (false when not shown)

{

"test_search_index": {

"aliases": {},

"mappings": {

"doc": {

"properties": {

"age": {

"type": "long"

},

"birth": {

"type": "date"

},

"isMarried": {

"type": "boolean"

},

"job": {

"type": "text",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

},

"fielddata": true

},

"salary": {

"type": "long"

},

"username": {

"type": "text",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

}

}

}

}

},

"settings": {

"index": {

"creation_date": "1586715804177",

"number_of_shards": "5",

"number_of_replicas": "1",

"uuid": "FiiZo7rFQMCXRqwThmSlEw",

"version": {

"created": "6010199"

},

"provided_name": "test_search_index"

}

}

}

}

# Next, run terms bucketing on each of the two fields

# request

# Run terms bucketing on the username field

GET /test_search_index/_search

{

"size": 0,

"aggs": {

"terms_username": {

"terms": {

"field": "username",

"size": 10

}

}

}

}

# response

# Because fielddata is not enabled on the username field, an error is returned

{

"error": {

"root_cause": [

{

"type": "illegal_argument_exception",

"reason": "Fielddata is disabled on text fields by default. Set fielddata=true on [username] in order to load fielddata in memory by uninverting the inverted index. Note that this can however use significant memory. Alternatively use a keyword field instead."

}

],

"type": "search_phase_execution_exception",

"reason": "all shards failed",

"phase": "query",

"grouped": true,

"failed_shards": [

{

"shard": 0,

"index": "test_search_index",

"node": "WIUsESqiTZqLs_4nQTvbeQ",

"reason": {

"type": "illegal_argument_exception",

"reason": "Fielddata is disabled on text fields by default. Set fielddata=true on [username] in order to load fielddata in memory by uninverting the inverted index. Note that this can however use significant memory. Alternatively use a keyword field instead."

}

}

]

},

"status": 400

}

# request

# Run terms bucketing on the job field

GET /test_search_index/_search

{

"size": 0,

"aggs": {

"terms_job": {

"terms": {

"field": "job",

"size": 10

}

}

}

}

# response

# Because fielddata is enabled on the job field, terms bucketing succeeds

{

"took": 153,

"timed_out": false,

"_shards": {

"total": 5,

"successful": 5,

"skipped": 0,

"failed": 0

},

"hits": {

"total": 6,

"max_score": 0,

"hits": []

},

"aggregations": {

"terms_job": {

"doc_count_error_upper_bound": 0,

"sum_other_doc_count": 0,

"buckets": [

{

"key": "engineer",

"doc_count": 6

},

{

"key": "java",

"doc_count": 2

},

{

"key": "ruby",

"doc_count": 2

},

{

"key": "web",

"doc_count": 2

},

{

"key": "senior",

"doc_count": 1

}

]

}

}

}Range

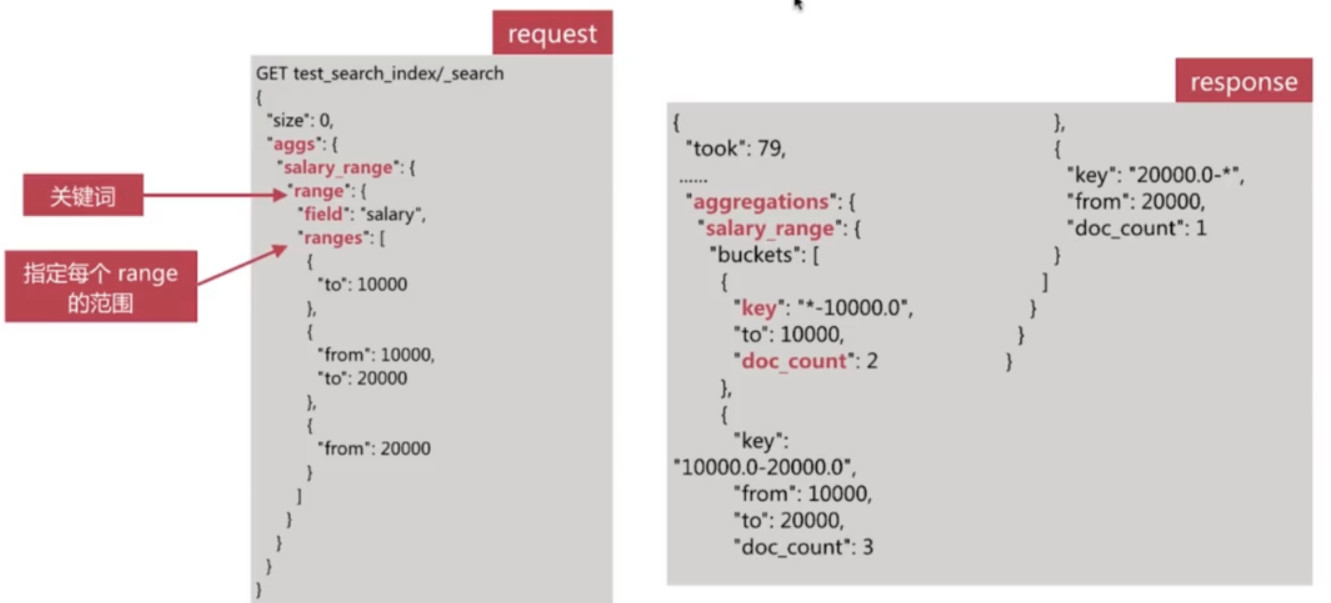

通过制定数值的范围来设定分桶规则

示例:

# request # 当指定key时, es就不会生成默认的key GET /test_search_index/_search { "size": 0, "aggs": { "salary_range": { "range": { "field": "salary", "ranges": [ { "key": "glt 10000", "from": 0, "to": 10000 }, { "key": "glt 20000", "from": 10000, "to": 20000 }, { "key": "glt 30000", "from": 20000, "to": 30000 } ] } } } } # response { "took": 53, "timed_out": false, "_shards": { "total": 5, "successful": 5, "skipped": 0, "failed": 0 }, "hits": { "total": 6, "max_score": 0, "hits": [] }, "aggregations": { "salary_range": { "buckets": [ { "key": "glt 10000", "from": 0, "to": 10000, "doc_count": 2 }, { "key": "glt 20000", "from": 10000, "to": 20000, "doc_count": 3 }, { "key": "glt 30000", "from": 20000, "to": 30000, "doc_count": 0 } ] } } }

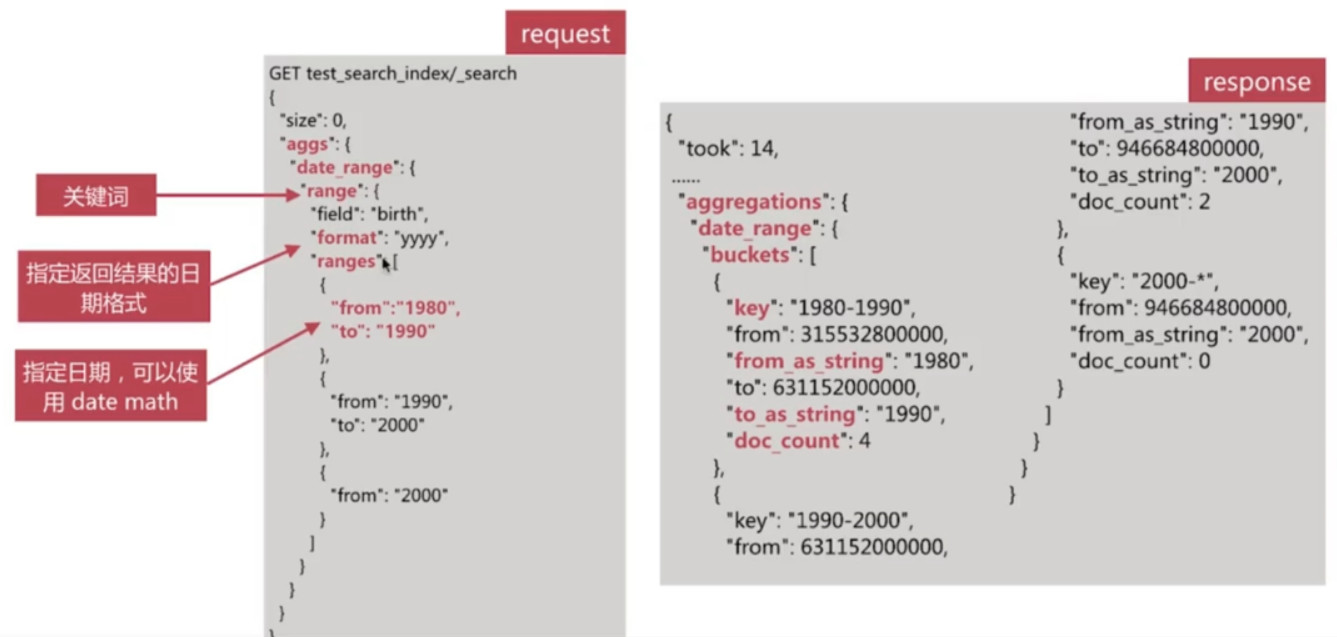

Date Range

Buckets are defined by specifying date ranges

Example:

# request
GET /test_search_index/_search
{
  "size": 0,
  "aggs": {
    "birth_range": {
      "date_range": {
        "field": "birth",
        "format": "yyyy",
        "ranges": [
          { "from": "1980", "to": "1990" },
          { "from": "1900", "to": "2000" }
        ]
      }
    }
  }
}

# response
{
  "took": 153,
  "timed_out": false,
  "_shards": { "total": 5, "successful": 5, "skipped": 0, "failed": 0 },
  "hits": { "total": 6, "max_score": 0, "hits": [] },
  "aggregations": {
    "birth_range": {
      "buckets": [
        {
          "key": "1900-2000",
          "from": -2208988800000,
          "from_as_string": "1900",
          "to": 946684800000,
          "to_as_string": "2000",
          "doc_count": 6
        },
        {
          "key": "1980-1990",
          "from": 315532800000,
          "from_as_string": "1980",
          "to": 631152000000,
          "to_as_string": "1990",
          "doc_count": 4
        }
      ]
    }
  }
}

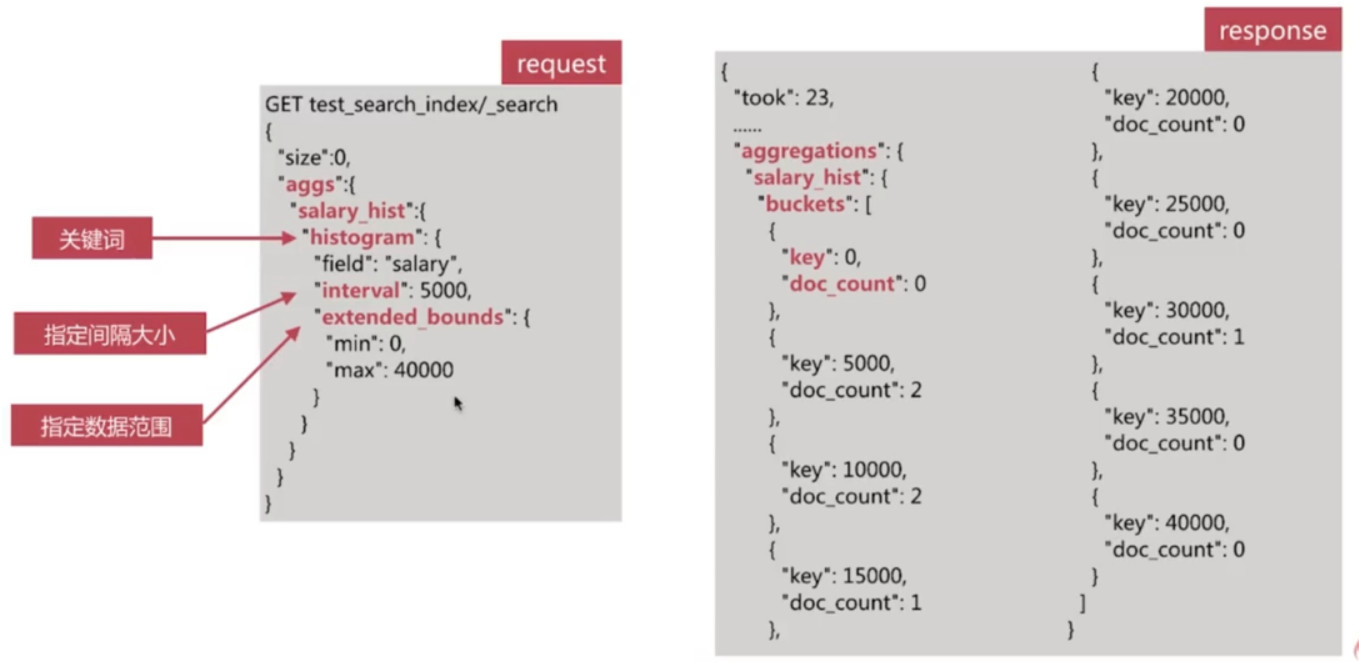

Histogram

- A histogram splits the data into buckets at fixed intervals
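As a rough illustration of fixed-interval bucketing, the plain-Python sketch below groups the sample `salary` values with an interval of 5000. Each value goes into the bucket whose key is the value rounded down to a multiple of the interval. Filling the empty buckets between the smallest and largest key is an assumption of this sketch, as are the function name and the chosen interval.

```python
from collections import Counter

# Salaries of the six sample documents.
salaries = [10000, 30000, 15000, 8000, 5000, 12000]


def histogram(values, interval):
    """Return (bucket_key, doc_count) pairs for fixed-width buckets."""
    counts = Counter((value // interval) * interval for value in values)
    lowest, highest = min(counts), max(counts)
    # Emit every bucket between the lowest and highest key, including empty ones.
    return [(key, counts.get(key, 0))
            for key in range(lowest, highest + interval, interval)]


salary_buckets = histogram(salaries, 5000)
for key, doc_count in salary_buckets:
    print(key, doc_count)
```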

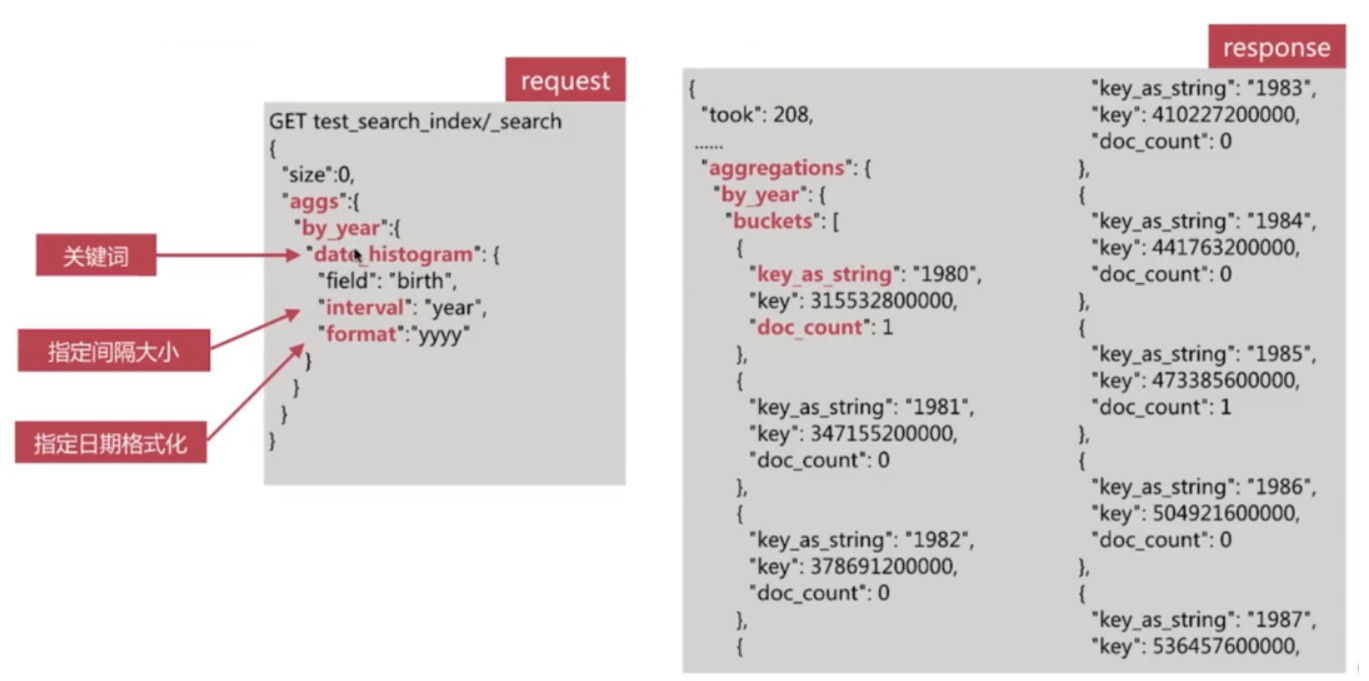

Date Histogram

A histogram (bar chart) over dates; it is a commonly used aggregation type in time-series analysis

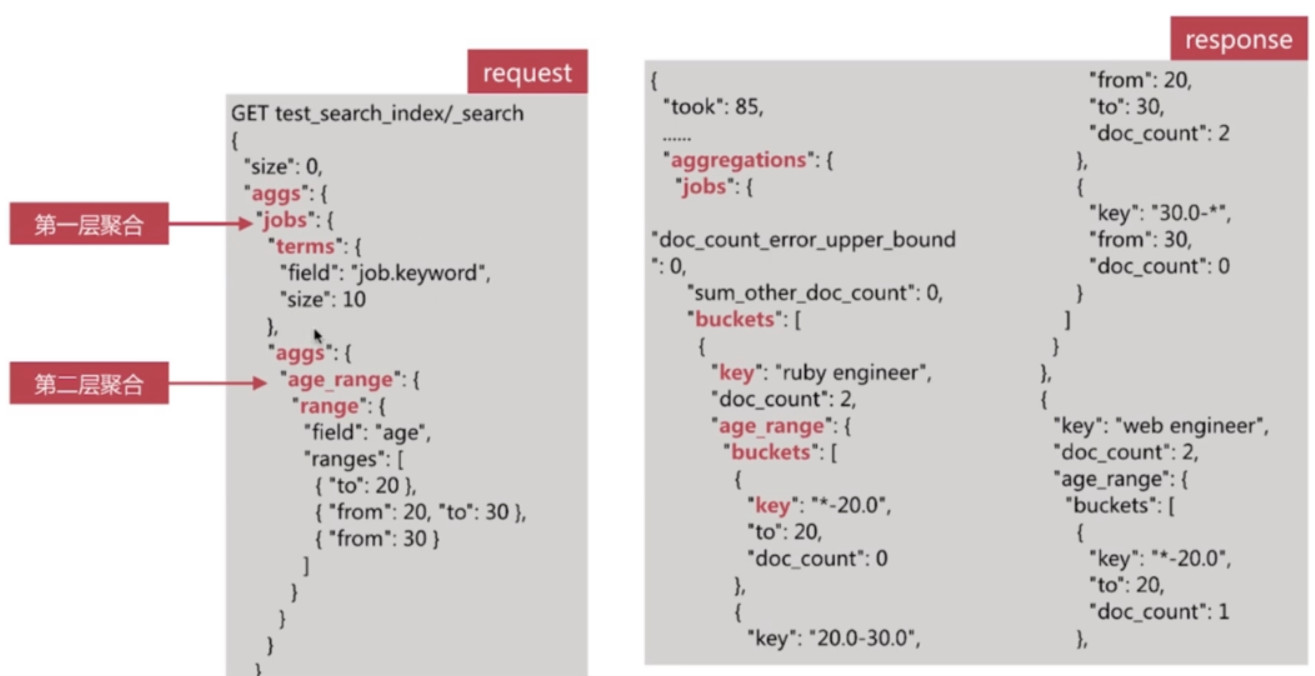

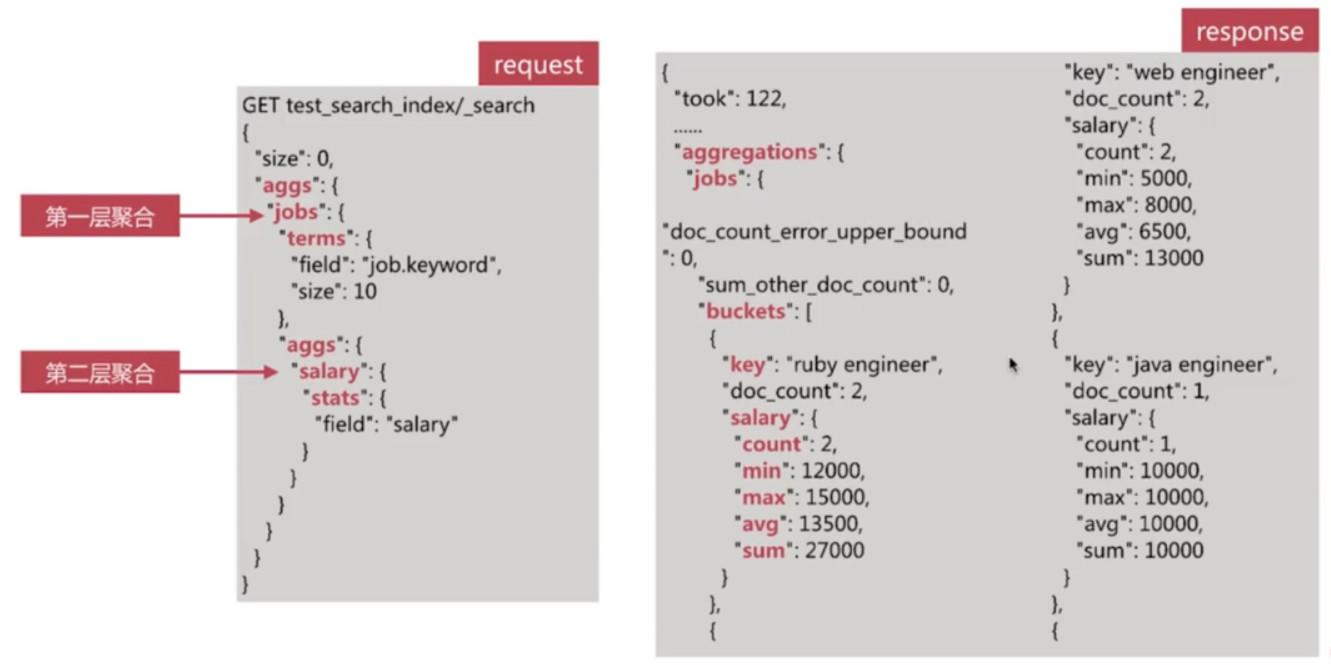

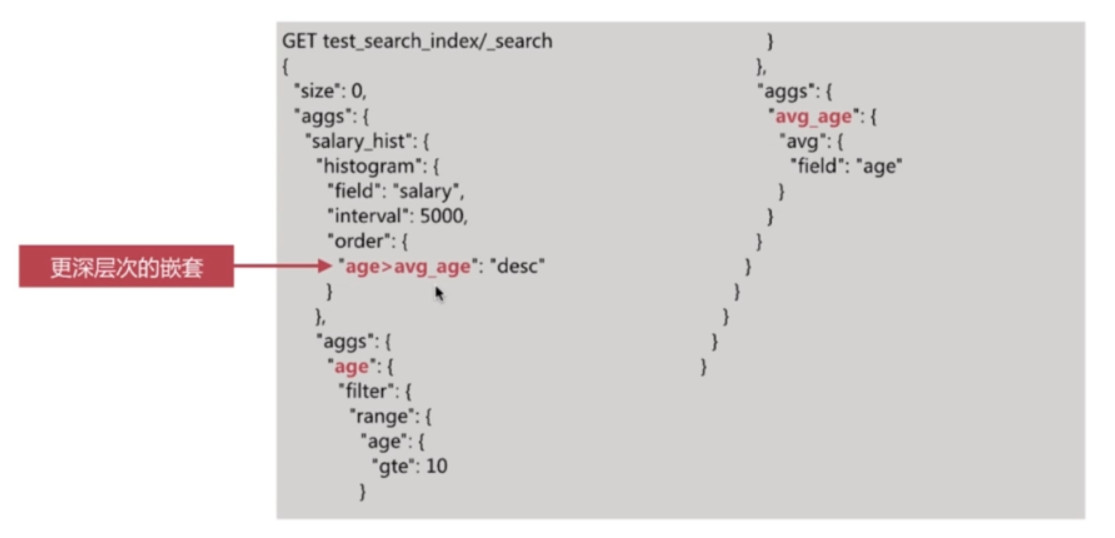

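Bucket + Metric aggregation analysis

A Bucket aggregation can be analyzed further by adding sub-aggregations, and a sub-aggregation can be either a Bucket or a Metric aggregation. This is what makes es aggregation analysis so powerful

Example:

Bucketing within a bucket:

Metric analysis after bucketing: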
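The two combinations above can be sketched in plain Python on the sample data. This is only an illustration of the nesting idea, not es code: `bucket_by`, `age_range`, and the "under 20" / "20 and over" split are names and choices made up for the sketch.

```python
from collections import defaultdict

docs = [
    {"job": "java engineer", "age": 18, "salary": 10000},
    {"job": "java senior engineer", "age": 28, "salary": 30000},
    {"job": "ruby engineer", "age": 22, "salary": 15000},
    {"job": "web engineer", "age": 23, "salary": 8000},
    {"job": "web engineer", "age": 18, "salary": 5000},
    {"job": "ruby engineer", "age": 26, "salary": 12000},
]


def bucket_by(documents, key_fn):
    """Group documents into buckets keyed by key_fn(doc)."""
    buckets = defaultdict(list)
    for doc in documents:
        buckets[key_fn(doc)].append(doc)
    return dict(buckets)


def age_range(doc):
    return "under 20" if doc["age"] < 20 else "20 and over"


# First level: one bucket per job.
by_job = bucket_by(docs, lambda doc: doc["job"])

# Bucket, then bucket: split every job bucket again by age range.
age_ranges_by_job = {
    job: {key: len(group) for key, group in bucket_by(members, age_range).items()}
    for job, members in by_job.items()
}

# Bucket, then metric: average salary inside every job bucket.
avg_salary_by_job = {
    job: sum(doc["salary"] for doc in members) / len(members)
    for job, members in by_job.items()
}

print(age_ranges_by_job)
print(avg_salary_by_job)
```

The per-job averages are the same figures that appear as `avg_salary` in the Min Bucket example later in this article.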

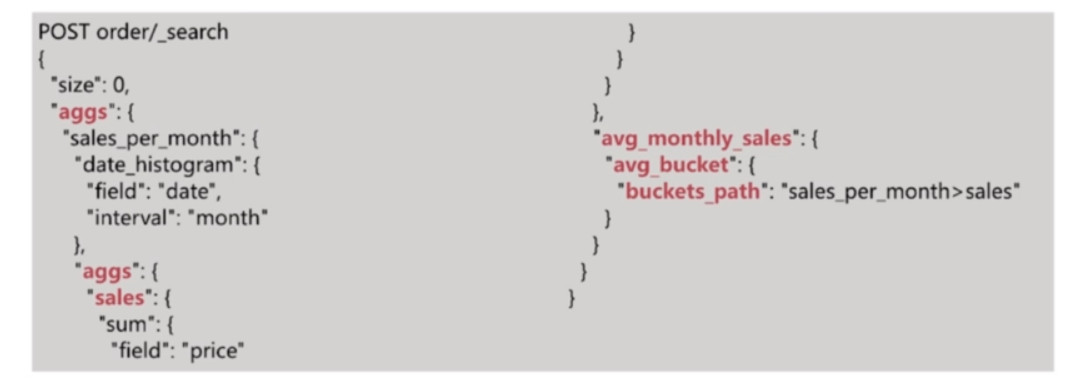

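Pipeline aggregation analysis

Runs a further aggregation over the results of an aggregation, supports chaining, and can answer questions such as:

- What is the average monthly sales amount of orders?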

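Types of Pipeline aggregation analysis

- The result of a Pipeline aggregation is written into the original result. Depending on where it is written, there are two types:

  - Parent: the result is embedded inside the existing aggregation result

    - Derivative

    - Moving Average

    - Cumulative Sum

  - Sibling: the result sits at the same level as the existing aggregation result

    - Max/Min/Avg/Sum Bucket

    - Stats/Extended Stats Bucket

    - Percentiles Bucket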

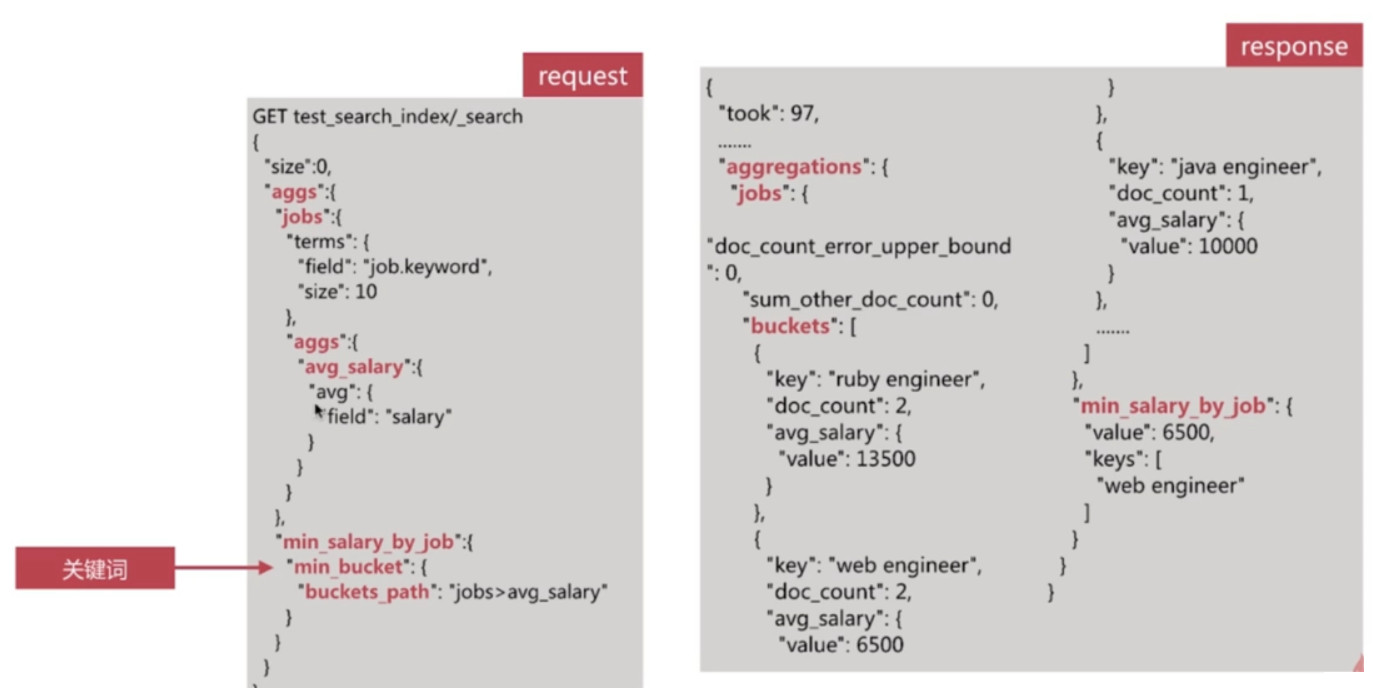

Pipeline aggregation analysis: Sibling

Min Bucket

Finds the Bucket with the smallest value among all Buckets and returns its name and value

Example:

# request

GET /test_search_index/_search

{

"size": 0,

"aggs": {

"job_terms": {

"terms": {

"field": "job.keyword"

},

"aggs": {

"avg_salary": {

"avg": {

"field": "salary"

}

}

}

},

"min_avg_salary": {

"min_bucket": {

"buckets_path": "job_terms>avg_salary"

}

}

}

}

# response

{

"took": 57,

"timed_out": false,

"_shards": {

"total": 5,

"successful": 5,

"skipped": 0,

"failed": 0

},

"hits": {

"total": 6,

"max_score": 0,

"hits": []

},

"aggregations": {

"job_terms": {

"doc_count_error_upper_bound": 0,

"sum_other_doc_count": 0,

"buckets": [

{

"key": "ruby engineer",

"doc_count": 2,

"avg_salary": {

"value": 13500

}

},

{

"key": "web engineer",

"doc_count": 2,

"avg_salary": {

"value": 6500

}

},

{

"key": "java engineer",

"doc_count": 1,

"avg_salary": {

"value": 10000

}

},

{

"key": "java senior engineer",

"doc_count": 1,

"avg_salary": {

"value": 30000

}

}

]

},

"min_avg_salary": {

"value": 6500,

"keys": [

"web engineer"

]

}

}

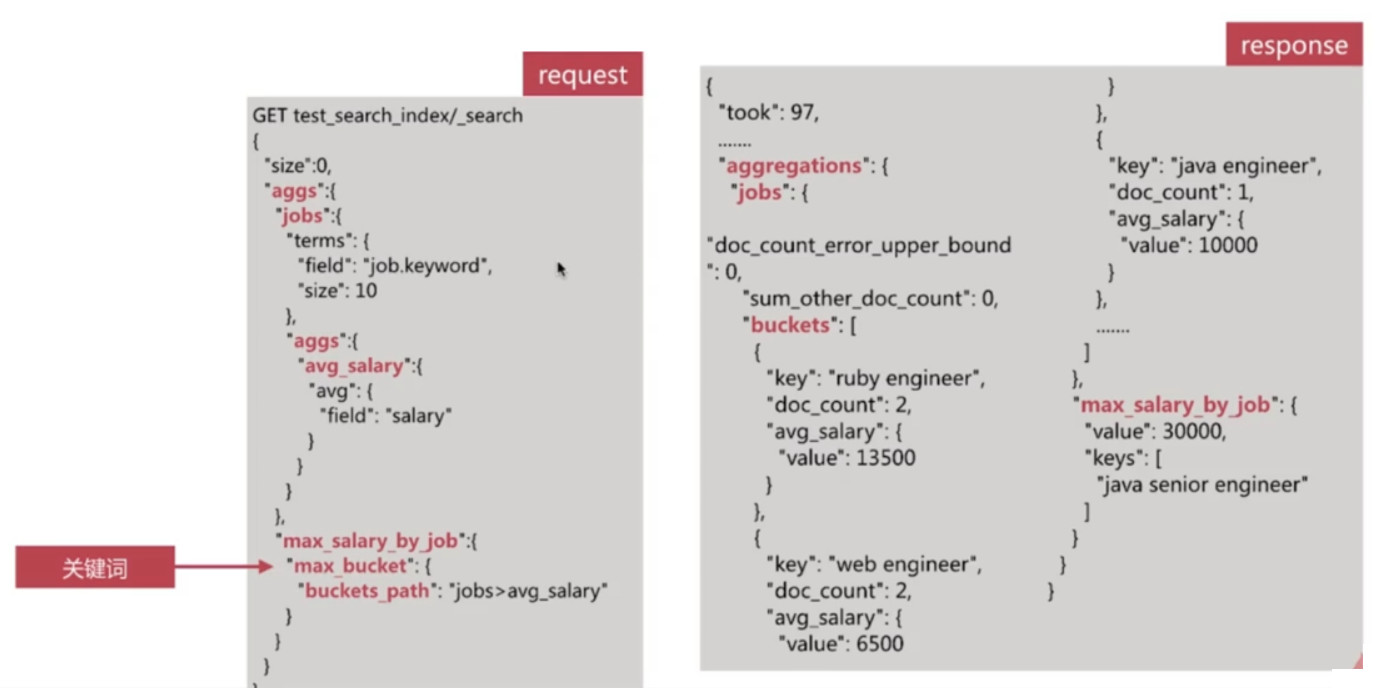

}Max Bucket

- Finds the Bucket with the largest value among all Buckets and returns its name and value

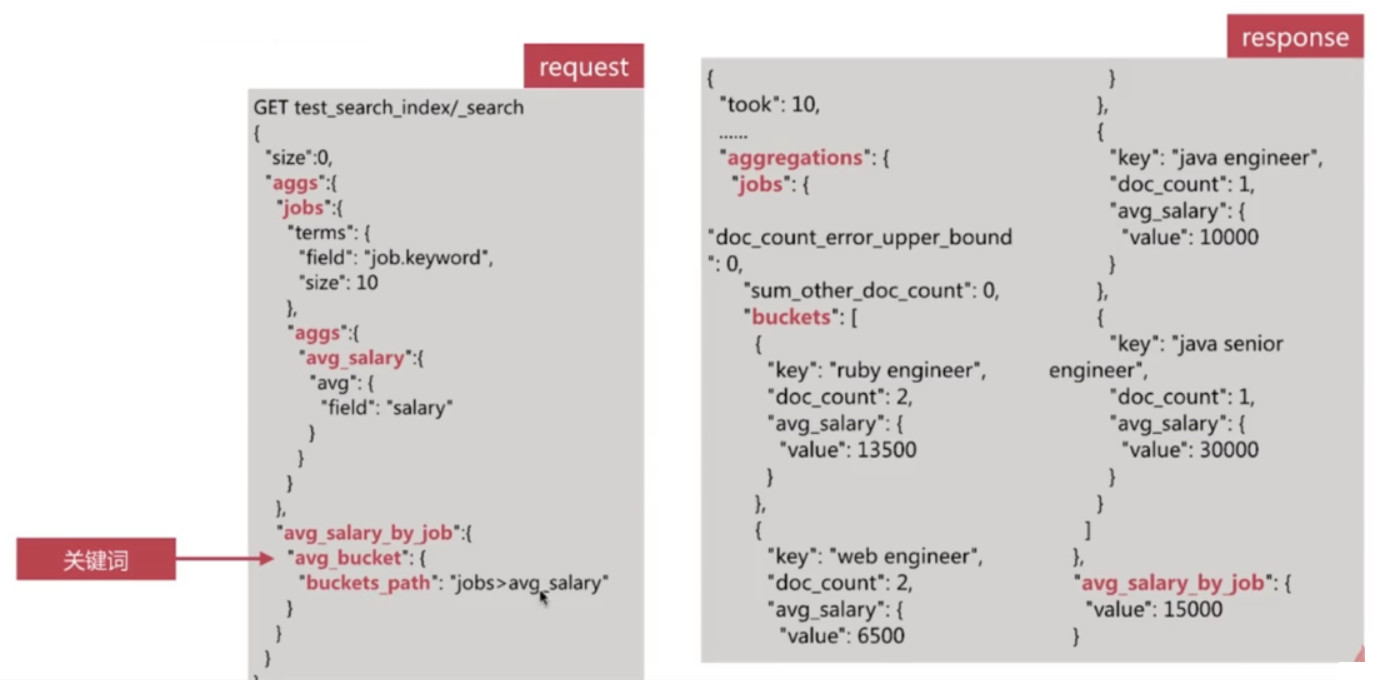

Avg Bucket

Computes the average of all Bucket values

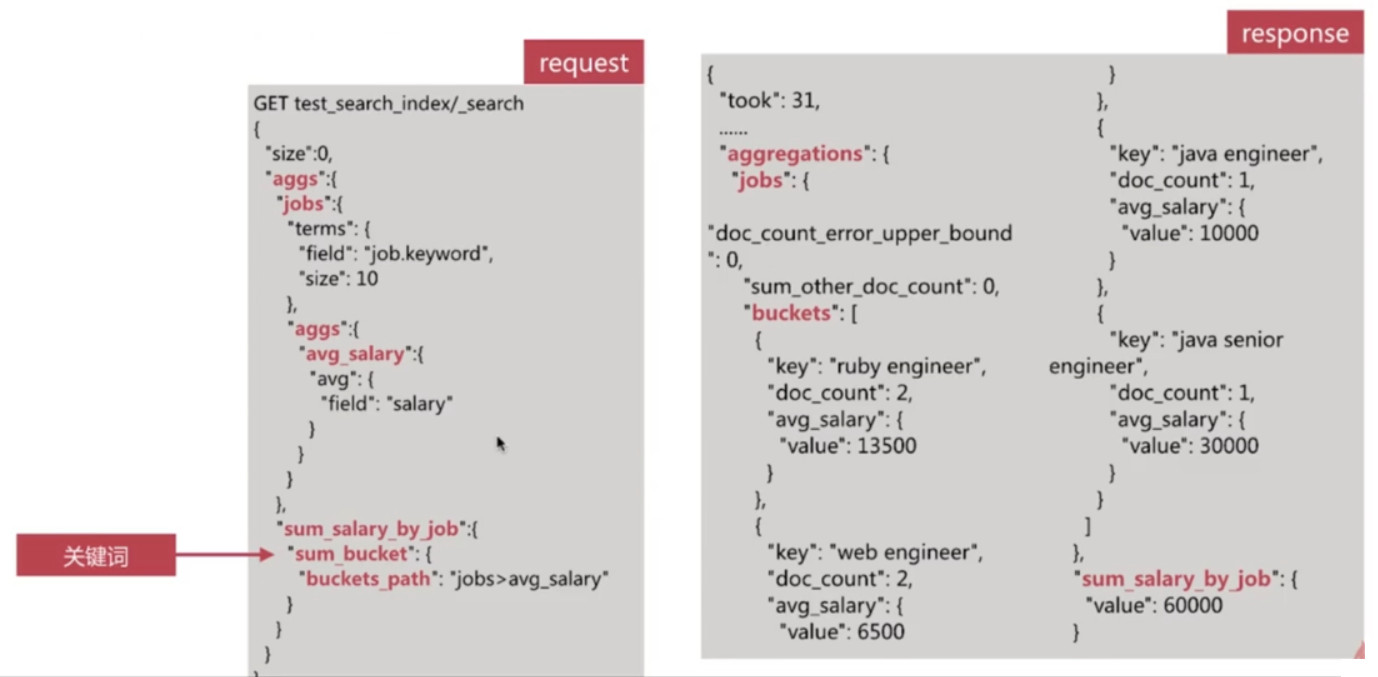

Sum Bucket

Computes the sum of all Bucket values

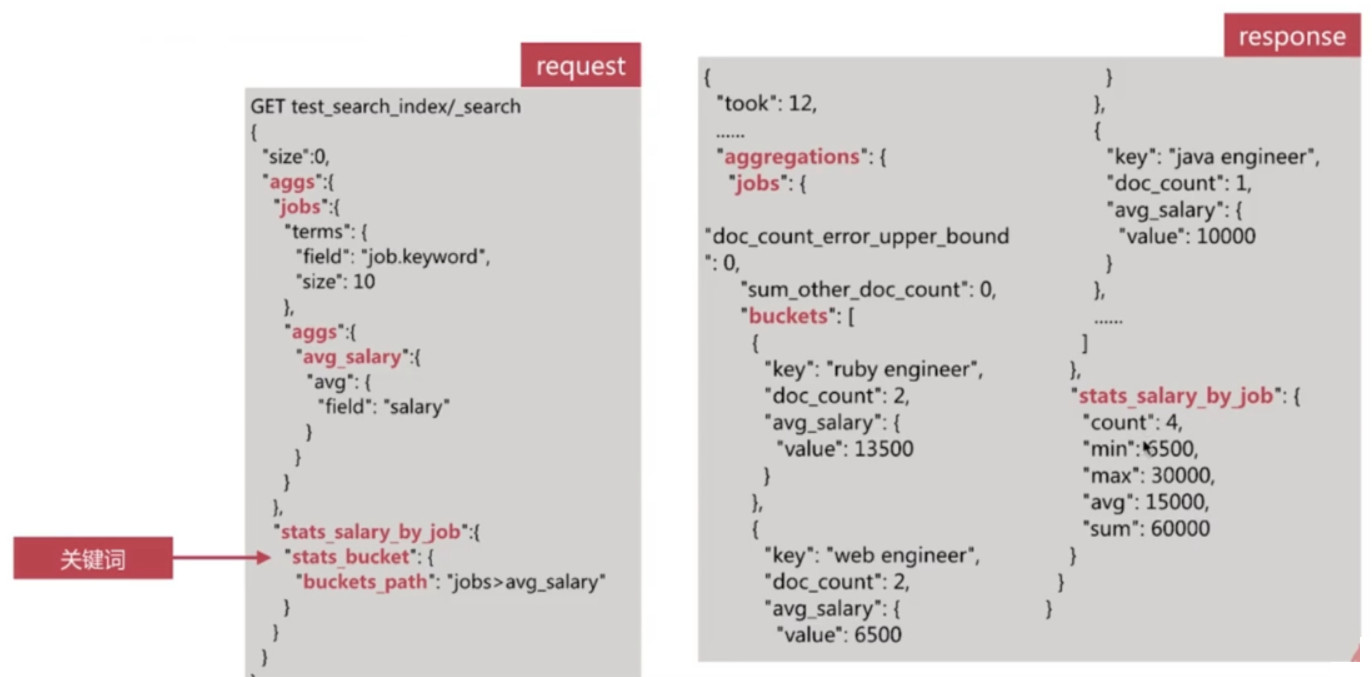

Stats Bucket

Computes Stats over all Bucket values

Example:

# request
GET /test_search_index/_search
{
  "size": 0,
  "aggs": {
    "job_terms": {
      "terms": { "field": "job.keyword" },
      "aggs": {
        "avg_salary": { "avg": { "field": "salary" } }
      }
    },
    "stats_avg_salary": {
      "stats_bucket": { "buckets_path": "job_terms>avg_salary" }
    }
  }
}

# response
{
  "took": 45,
  "timed_out": false,
  "_shards": { "total": 5, "successful": 5, "skipped": 0, "failed": 0 },
  "hits": { "total": 6, "max_score": 0, "hits": [] },
  "aggregations": {
    "job_terms": {
      "doc_count_error_upper_bound": 0,
      "sum_other_doc_count": 0,
      "buckets": [
        { "key": "ruby engineer", "doc_count": 2, "avg_salary": { "value": 13500 } },
        { "key": "web engineer", "doc_count": 2, "avg_salary": { "value": 6500 } },
        { "key": "java engineer", "doc_count": 1, "avg_salary": { "value": 10000 } },
        { "key": "java senior engineer", "doc_count": 1, "avg_salary": { "value": 30000 } }
      ]
    },
    "stats_avg_salary": {
      "count": 4,
      "min": 6500,
      "max": 30000,
      "avg": 15000,
      "sum": 60000
    }
  }
}

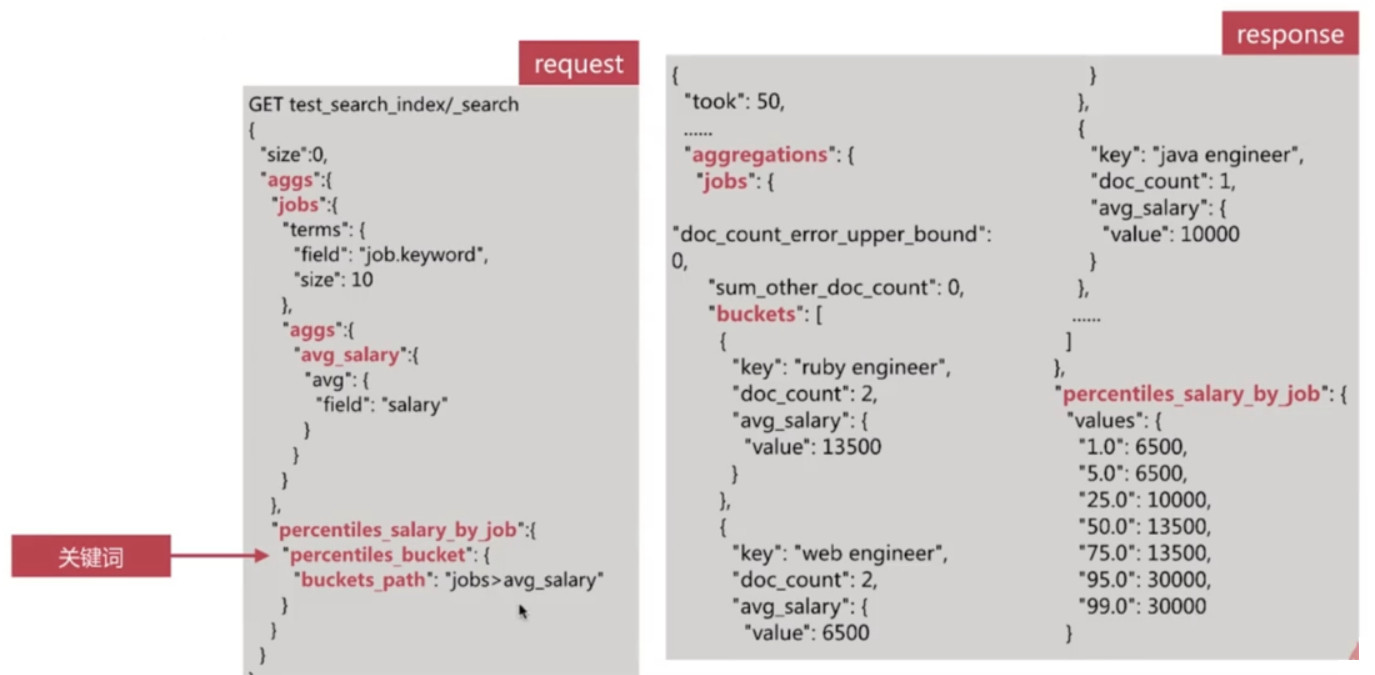

Percentiles Bucket

Computes percentiles over all Bucket values

Pipeline aggregation analysis: Parent

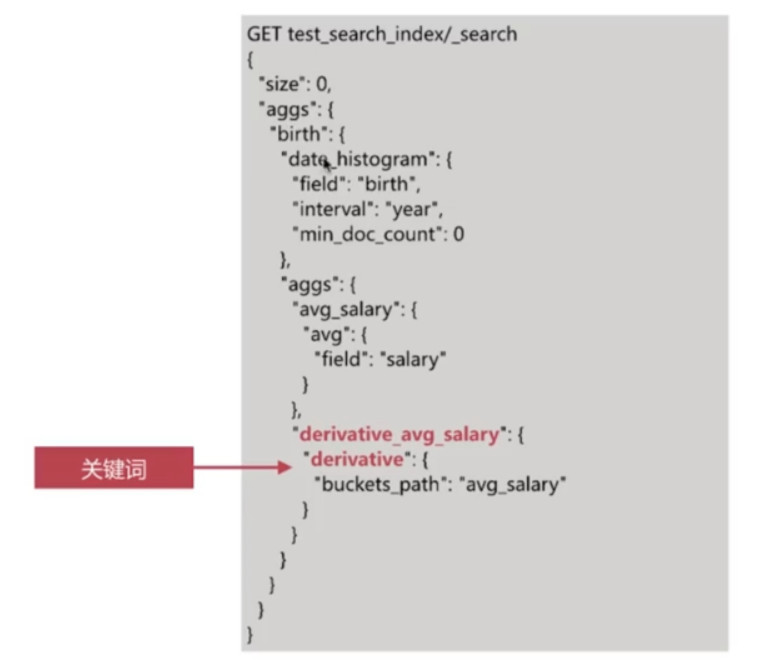

Derivative

Computes the derivative of Bucket values
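In this context the derivative can be read as the difference between each bucket's value and the value of the bucket before it. The plain-Python sketch below illustrates that reading; it is not es code. The input list copies the yearly `avg_salary` values (1980 to 1994) from the response of the example in this section, with `None` standing for empty buckets, and the rule "no result when either neighbour is empty" is an assumption chosen to match that response.

```python
def derivative(values):
    """Difference between consecutive bucket values; None where undefined."""
    result = [None]  # the first bucket has no predecessor
    for previous, current in zip(values, values[1:]):
        if previous is None or current is None:
            result.append(None)
        else:
            result.append(current - previous)
    return result


# avg_salary per yearly bucket, 1980 through 1994.
avg_salary_by_year = [
    30000, None, None, None, None,   # 1980-1984
    15000, None, 12000, None, 8000,  # 1985-1989
    10000, None, None, None, 5000,   # 1990-1994
]

derivatives = derivative(avg_salary_by_year)
print(derivatives)
```

Only 1990 has a non-empty predecessor (1989), so it is the only bucket with a derivative: 10000 - 8000 = 2000.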

# request

GET /test_search_index/_search

{

"size": 0,

"aggs": {

"birth_histogram": {

"date_histogram": {

"field": "birth",

"interval": "year"

},

"aggs": {

"avg_salary": {

"avg": {

"field": "salary"

}

},

"derivative_avg_salary": {

"derivative": {

"buckets_path": "avg_salary"

}

}

}

}

}

}

# response

{

"took": 155,

"timed_out": false,

"_shards": {

"total": 5,

"successful": 5,

"skipped": 0,

"failed": 0

},

"hits": {

"total": 6,

"max_score": 0,

"hits": []

},

"aggregations": {

"birth_histogram": {

"buckets": [

{

"key_as_string": "1980-01-01T00:00:00.000Z",

"key": 315532800000,

"doc_count": 1,

"avg_salary": {

"value": 30000

}

},

{

"key_as_string": "1981-01-01T00:00:00.000Z",

"key": 347155200000,

"doc_count": 0,

"avg_salary": {

"value": null

},

"derivative_avg_salary": {

"value": null

}

},

{

"key_as_string": "1982-01-01T00:00:00.000Z",

"key": 378691200000,

"doc_count": 0,

"avg_salary": {

"value": null

},

"derivative_avg_salary": {

"value": null

}

},

{

"key_as_string": "1983-01-01T00:00:00.000Z",

"key": 410227200000,

"doc_count": 0,

"avg_salary": {

"value": null

},

"derivative_avg_salary": {

"value": null

}

},

{

"key_as_string": "1984-01-01T00:00:00.000Z",

"key": 441763200000,

"doc_count": 0,

"avg_salary": {

"value": null

},

"derivative_avg_salary": {

"value": null

}

},

{

"key_as_string": "1985-01-01T00:00:00.000Z",

"key": 473385600000,

"doc_count": 1,

"avg_salary": {

"value": 15000

},

"derivative_avg_salary": {

"value": null

}

},

{

"key_as_string": "1986-01-01T00:00:00.000Z",

"key": 504921600000,

"doc_count": 0,

"avg_salary": {

"value": null

},

"derivative_avg_salary": {

"value": null

}

},

{

"key_as_string": "1987-01-01T00:00:00.000Z",

"key": 536457600000,

"doc_count": 1,

"avg_salary": {

"value": 12000

},

"derivative_avg_salary": {

"value": null

}

},

{

"key_as_string": "1988-01-01T00:00:00.000Z",

"key": 567993600000,

"doc_count": 0,

"avg_salary": {

"value": null

},

"derivative_avg_salary": {

"value": null

}

},

{

"key_as_string": "1989-01-01T00:00:00.000Z",

"key": 599616000000,

"doc_count": 1,

"avg_salary": {

"value": 8000

},

"derivative_avg_salary": {

"value": null

}

},

{

"key_as_string": "1990-01-01T00:00:00.000Z",

"key": 631152000000,

"doc_count": 1,

"avg_salary": {

"value": 10000

},

"derivative_avg_salary": {

"value": 2000

}

},

{

"key_as_string": "1991-01-01T00:00:00.000Z",

"key": 662688000000,

"doc_count": 0,

"avg_salary": {

"value": null

},

"derivative_avg_salary": {

"value": null

}

},

{

"key_as_string": "1992-01-01T00:00:00.000Z",

"key": 694224000000,

"doc_count": 0,

"avg_salary": {

"value": null

},

"derivative_avg_salary": {

"value": null

}

},

{

"key_as_string": "1993-01-01T00:00:00.000Z",

"key": 725846400000,

"doc_count": 0,

"avg_salary": {

"value": null

},

"derivative_avg_salary": {

"value": null

}

},

{

"key_as_string": "1994-01-01T00:00:00.000Z",

"key": 757382400000,

"doc_count": 1,

"avg_salary": {

"value": 5000

},

"derivative_avg_salary": {

"value": null

}

}

]

}

}

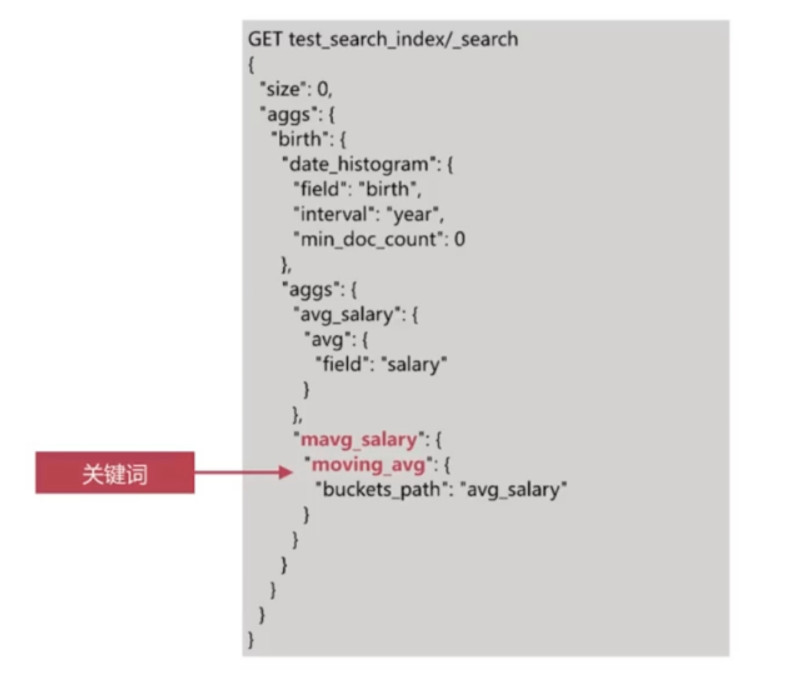

}Moving Average

计算Bucket值的移动平均值

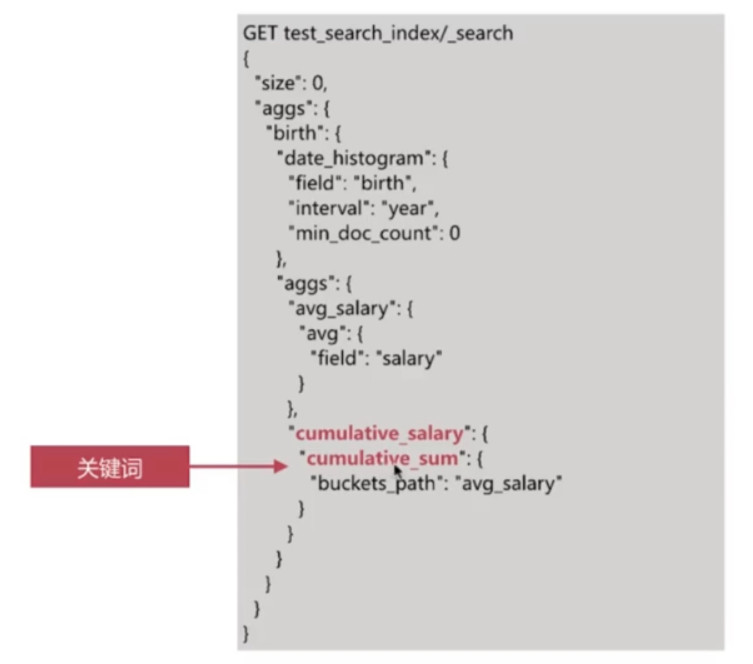

Cumulative Sum

Computes the cumulative (running) sum of Bucket values
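A running total is easy to picture with a small plain-Python sketch. The input below is a hypothetical per-year salary sum for the sample documents grouped by birth year (1980 to 1994), with empty years contributing 0; both the choice of metric and the treatment of empty buckets as zero are assumptions of this sketch, not a statement about es internals.

```python
from itertools import accumulate

# Sum of salary per yearly birth bucket, 1980 through 1994 (0 for empty years).
salary_sum_by_year = [
    30000, 0, 0, 0, 0,       # 1980-1984
    15000, 0, 12000, 0, 8000,  # 1985-1989
    10000, 0, 0, 0, 5000,    # 1990-1994
]


def cumulative_sum(values):
    """Running total of bucket values, one entry per bucket."""
    return list(accumulate(values))


running_total = cumulative_sum(salary_sum_by_year)
print(running_total)
```

The last entry equals the sum of all six salaries, which is a quick sanity check for a running total.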

作用范围

- es聚合分析默认作用范围是query的结果集, 可以通过如下的方式改变其作用范围:

- filter

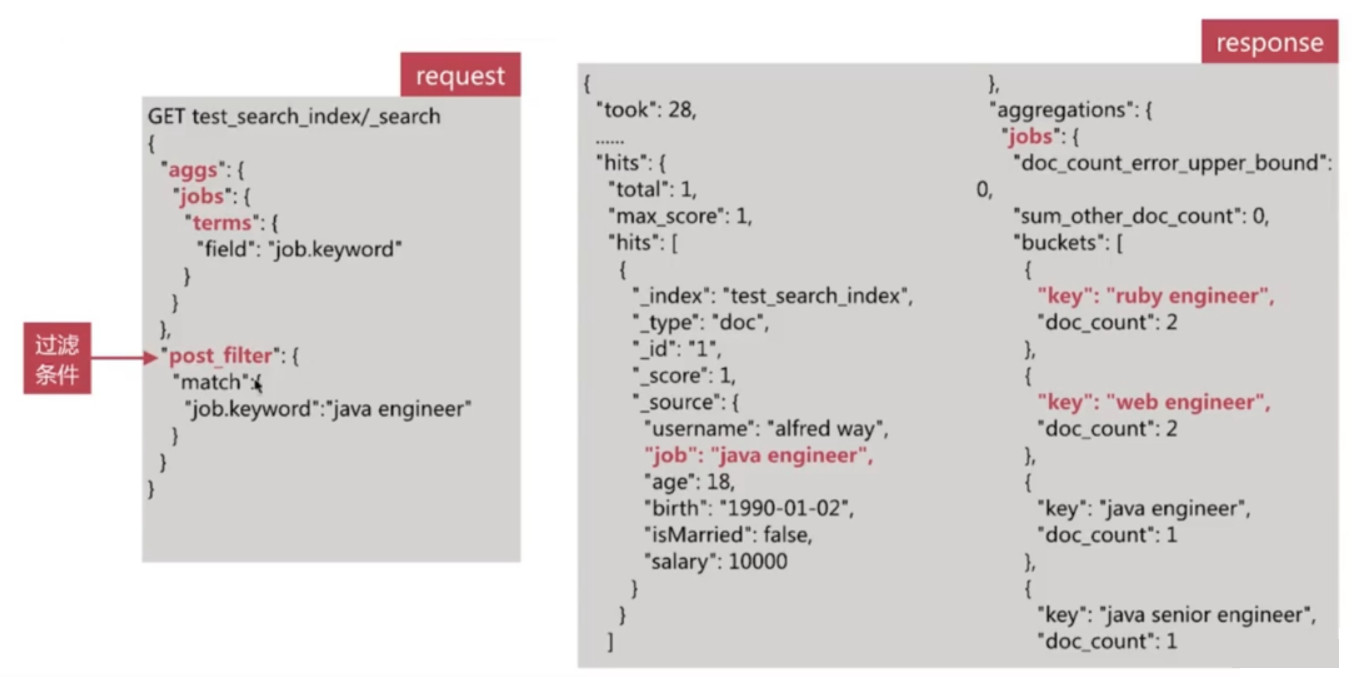

- post_filter

- global

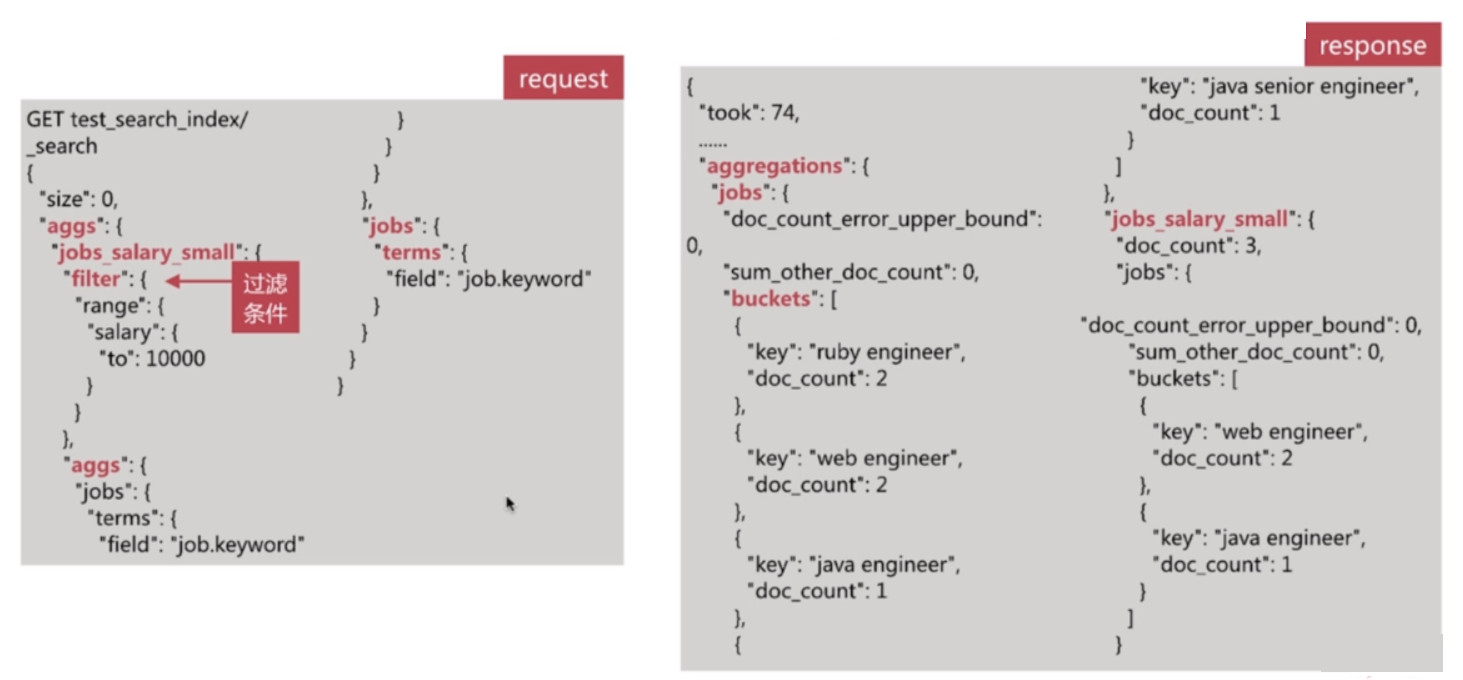

filter

Sets a filter condition for one particular aggregation, which changes that aggregation's scope without changing the overall query

post_filter

Filters the returned documents, but takes effect only after the aggregations have been computed

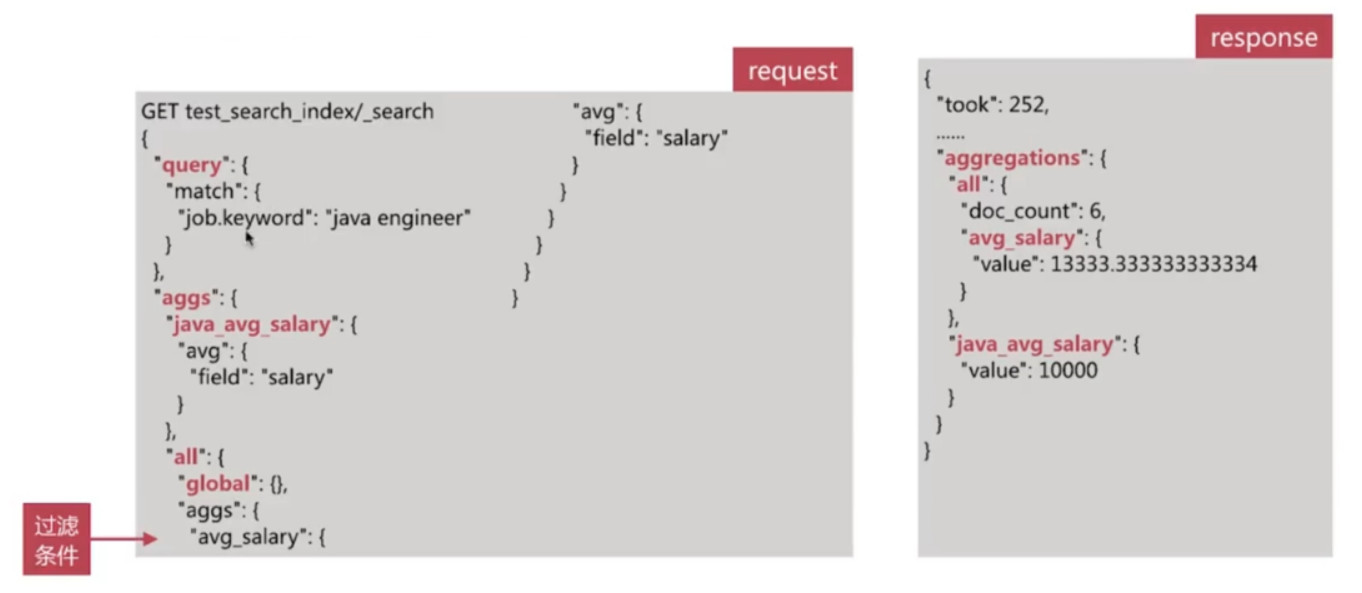

global

Ignores the query's filter conditions and analyzes all documents
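The difference between the default scope and the three options above can be sketched in plain Python. This is a simplified model rather than es code: the `search` function, its parameters, and the two predicates are hypothetical, and the only aggregation modelled is an average salary.

```python
docs = [
    {"username": "alfred way", "job": "java engineer", "salary": 10000},
    {"username": "tom", "job": "java senior engineer", "salary": 30000},
    {"username": "lee", "job": "ruby engineer", "salary": 15000},
    {"username": "Nick", "job": "web engineer", "salary": 8000},
    {"username": "Niko", "job": "web engineer", "salary": 5000},
    {"username": "Michell", "job": "ruby engineer", "salary": 12000},
]


def average(values):
    values = list(values)
    return sum(values) / len(values) if values else None


def search(documents, query, agg_filter=None, post_filter=None, use_global=False):
    """Return hit usernames and the average salary for the chosen scope."""
    matched = [doc for doc in documents if query(doc)]
    # global: the aggregation ignores the query and sees every document.
    agg_scope = documents if use_global else matched
    # filter: narrows only the input of the aggregation.
    if agg_filter is not None:
        agg_scope = [doc for doc in agg_scope if agg_filter(doc)]
    # post_filter: narrows only the hits; the aggregation is unaffected.
    hits = matched if post_filter is None else [d for d in matched if post_filter(d)]
    return {
        "hits": [doc["username"] for doc in hits],
        "avg_salary": average(doc["salary"] for doc in agg_scope),
    }


def is_java(doc):
    return "java" in doc["job"].split()


def low_salary(doc):
    return doc["salary"] < 20000


default_scope = search(docs, is_java)
filter_scope = search(docs, is_java, agg_filter=low_salary)
post_filter_scope = search(docs, is_java, post_filter=low_salary)
global_scope = search(docs, is_java, use_global=True)

for name, result in [("default", default_scope), ("filter", filter_scope),
                     ("post_filter", post_filter_scope), ("global", global_scope)]:
    print(name, result)
```

In this model, filter changes the average but not the hits, post_filter changes the hits but not the average, and global computes the average over all six documents regardless of the query.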

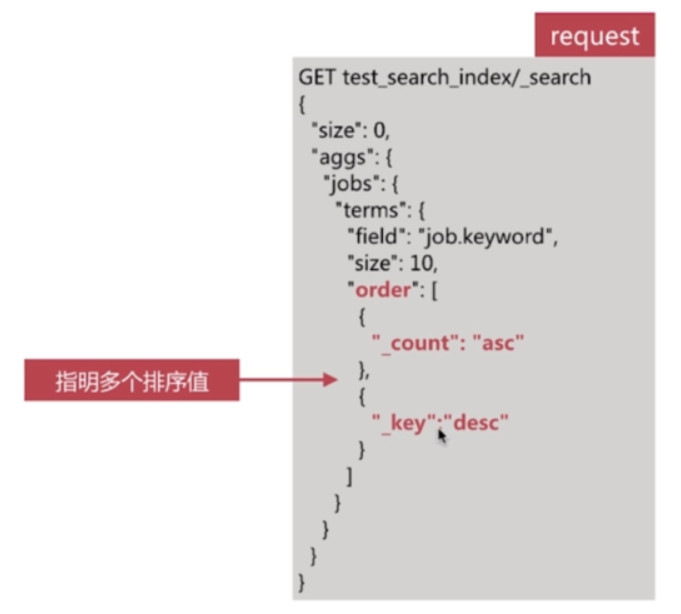

Sorting

Buckets can be sorted by built-in key values, for example:

- _count: the document count

- _key: sort by the key value
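The two orderings can be illustrated with a plain-Python sketch over the job buckets of the sample data. It only shows the sorting idea; breaking ties on equal counts by ascending key is an assumption of the sketch, chosen because it reproduces the bucket order seen in the `job_terms` responses in this article.

```python
from collections import Counter

# Job values of the six sample documents.
jobs = [
    "java engineer",
    "java senior engineer",
    "ruby engineer",
    "web engineer",
    "web engineer",
    "ruby engineer",
]

# One (key, doc_count) pair per bucket.
buckets = Counter(jobs).items()

# Order by document count, largest first; ties fall back to the key.
by_count_desc = sorted(buckets, key=lambda bucket: (-bucket[1], bucket[0]))

# Order by the bucket key, ascending.
by_key_asc = sorted(buckets, key=lambda bucket: bucket[0])

print(by_count_desc)
print(by_key_asc)
```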

Example 1:

Example 2:

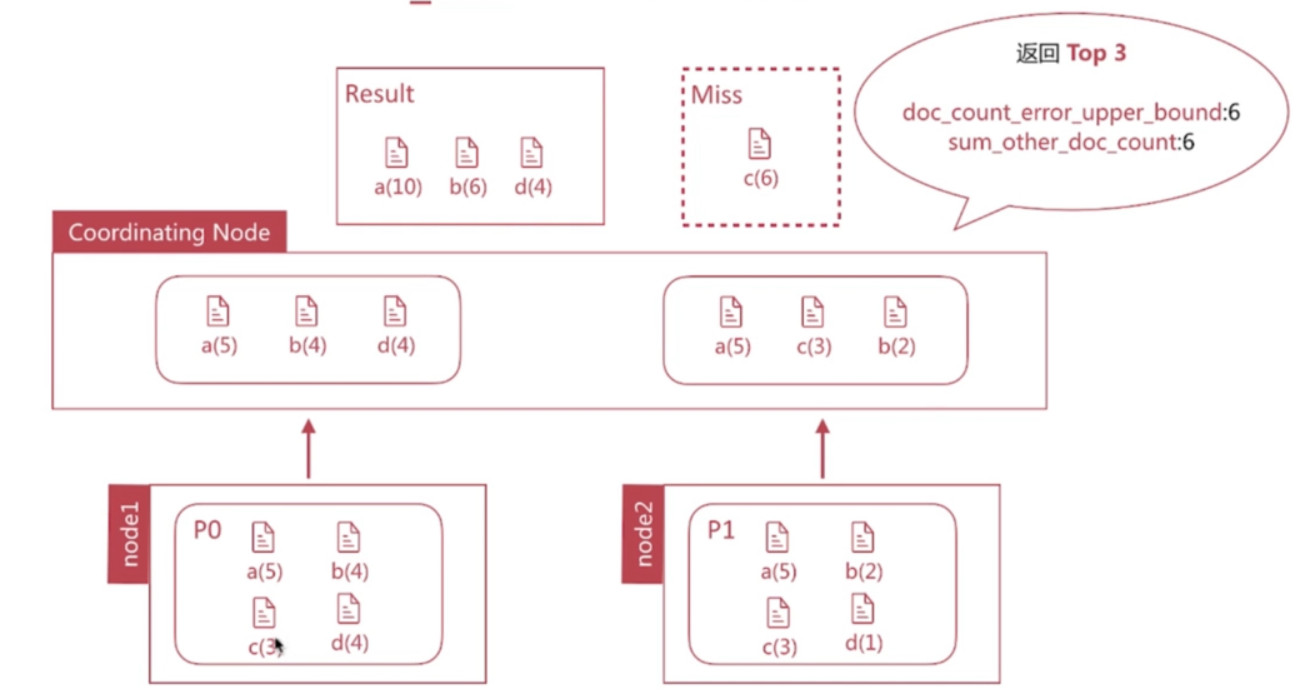

计算精准度问题

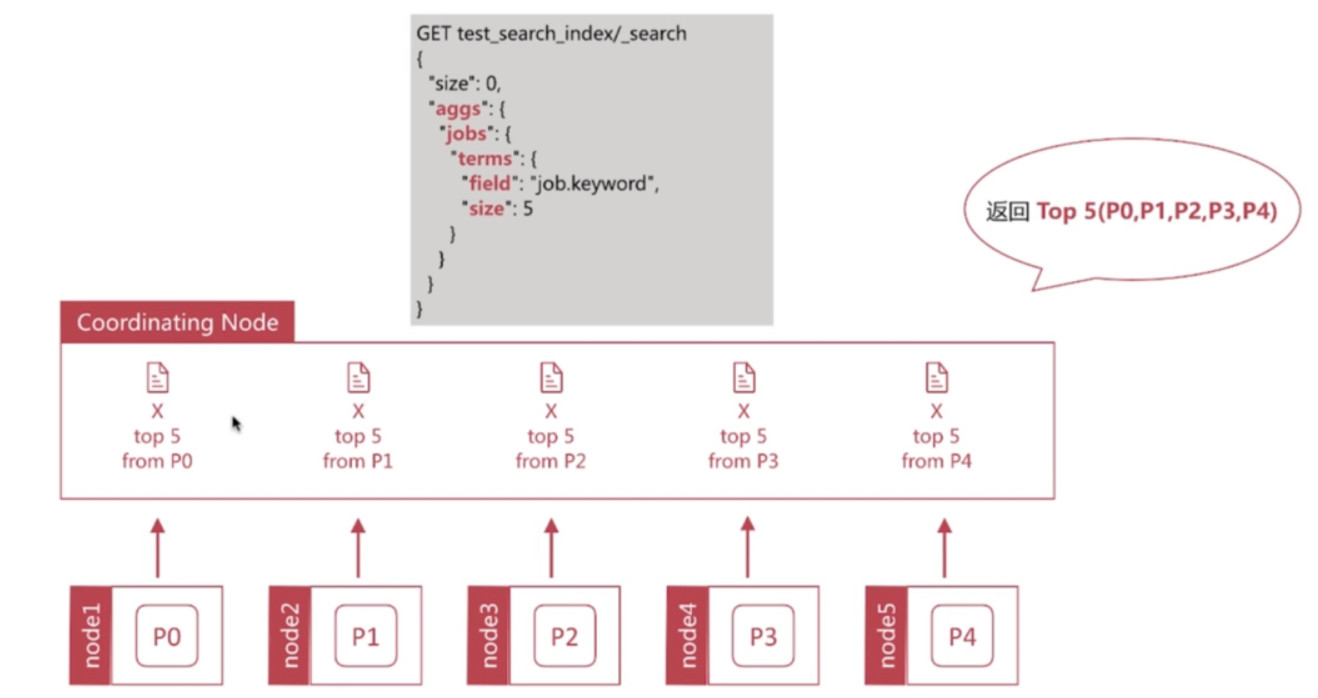

Terms聚合的执行流程

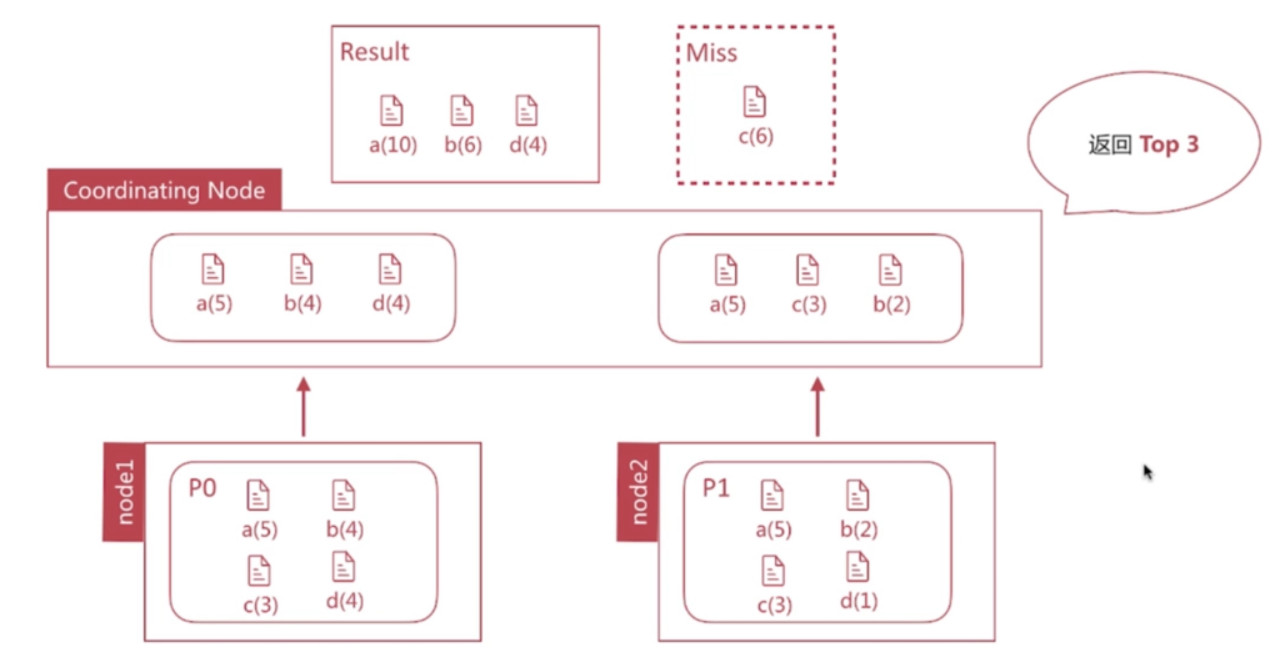

Terms is not always accurate
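Why a distributed top-N can go wrong is easiest to see with a toy simulation. In the plain-Python sketch below, each shard returns only its own top `shard_size` terms and a coordinating step merges them; the shard contents, term names, and function name are invented for illustration, and real es behavior involves more detail than this model.

```python
from collections import Counter

# Per-shard document counts for each term (made-up data).
shards = [
    {"a": 6, "b": 4, "c": 3},
    {"a": 6, "d": 4, "c": 3},
]


def terms_agg(shard_counts, size, shard_size):
    """Merge each shard's top `shard_size` terms and return the top `size`."""
    merged = Counter()
    for counts in shard_counts:
        top = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[:shard_size]
        merged.update(dict(top))
    return sorted(merged.items(), key=lambda kv: (-kv[1], kv[0]))[:size]


# True totals are a=12, c=6, b=4, d=4, so the correct top 2 is a and c.
too_small = terms_agg(shards, size=2, shard_size=2)
large_enough = terms_agg(shards, size=2, shard_size=3)

print(too_small)
print(large_enough)
```

With `shard_size=2`, term `c` is third on both shards and never reaches the merge step, so `b` is returned in its place. Asking each shard for one more term is enough to recover the correct answer in this example.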

Terms不准确的解决办法

设置shard数为1, 消除数据分散的问题, 但无法承载大数据量

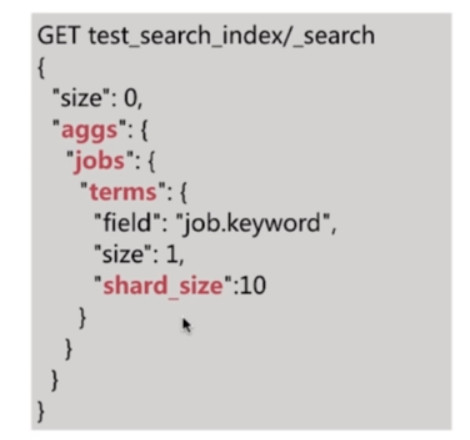

合理设置Shard_Size大小, 即每次从Shard上额外多获取数据, 以提升准确度

Shard_Size大小的设定方法

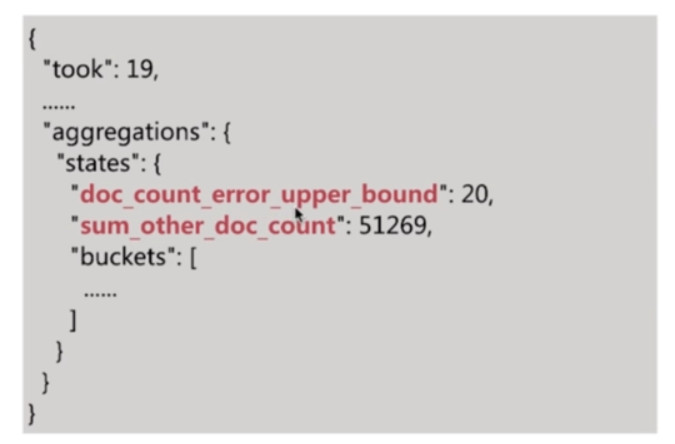

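The result of a terms aggregation contains the following two statistics:

- doc_count_error_upper_bound: the largest possible document count of a term that was left out

- sum_other_doc_count: the total number of documents belonging to terms other than those in the returned buckets

The default Shard_Size is: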

- shard_size = (size * 1.5) + 10
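As a quick arithmetic check of the default above, here is a small Python sketch. The function name is hypothetical, and truncating a fractional result to an integer is an assumption of the sketch.

```python
def default_shard_size(size):
    """Default per-shard term count for a requested `size`, per the formula above."""
    # Assumption: a fractional result is truncated to an integer.
    return int(size * 1.5 + 10)


for size in (10, 20, 100):
    print(size, default_shard_size(size))
```

For the `"size": 10` used in the terms examples of this article, each shard would therefore be asked for 25 terms.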

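Increasing Shard_Size lowers doc_count_error_upper_bound and so improves accuracy

- This increases the overall amount of computation, which makes responses slower

Approximate statistical algorithms

In es aggregation analysis, the Cardinality and Percentile analyses use approximate statistical algorithms

- The results are approximately correct, but not necessarily exact

- Parameters can be tuned to make the results more precise, at the cost of more computation time and higher resource usage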